4chan and 8chan: everything that resulted from the controversial platforms

In the beginning, there was 4chan

It all started on 4chan, an anonymous English-language imageboard website created in 2003 by Christopher Poole, who served as the site’s head administrator for more than 11 years before stepping down. At the time, neither Reddit nor Voat existed yet.

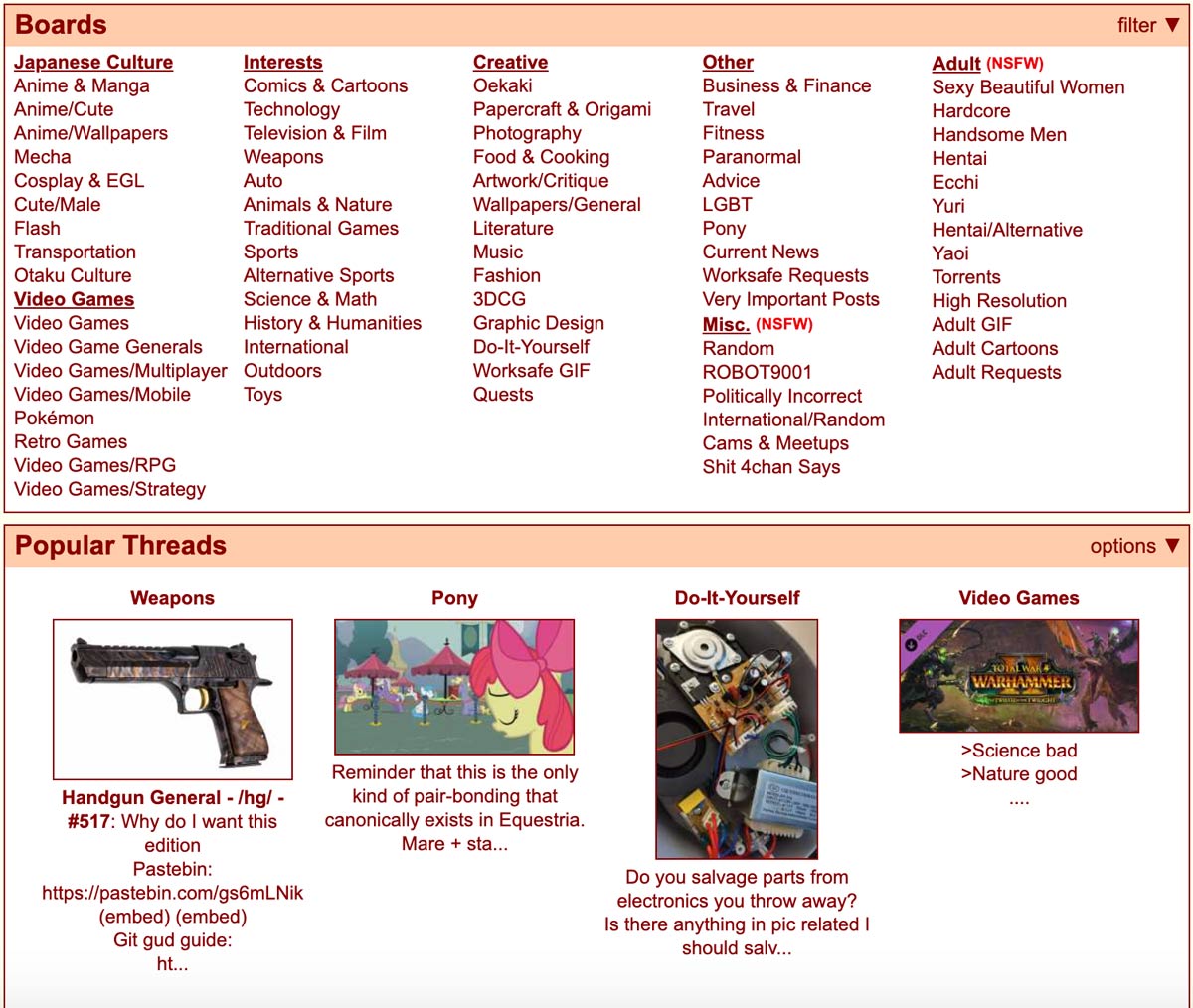

This led 4chan to host a wide variety of ‘boards’ on all sorts of topics. From anime and manga to video games, music, literature, fitness, politics, and sports, 4chan offered boards focused on anything and everything, and people loved it!

The platform was created as an unofficial counterpart to the Japanese imageboard Futaba Channel, also known as 2chan, and because of that, its first boards were created for posting images and discussion related to anime.

Before 4chan became the subject of media attention as a source of controversies, it was often described as the hub of internet culture, and its community was said to have an important role in the formation of prominent memes, such as lolcats, Rickrolling, and rage comics, as well as hacktivist movements such as Anonymous—but then, questionable activities started taking place.

First, the site was blamed for the leak of stolen nude photographs of dozens of female celebrities, including Jennifer Lawrence and Rihanna, an incident that later became known as The Fappening. After that, 4chan users (who always remain anonymous because registration is not possible on the site) invented Ebola-Chan, a ‘mascot’ for Ebola, and encouraged iPhone owners to microwave their phones.

Although the website had around 22 million monthly users in 2014 and still exists today, users now tend to favour other platforms like Voat or Reddit. But what made 4chan so special in the first place?

For one thing, users never need to make an account or pick a username. That means that they can say and do virtually anything they want with only the most remote threat of accountability. It also means that they can’t message other users or establish any kind of social relationship with them, unless they reveal their identity in some way. For a social network, that’s a pretty strange way to work.

On top of that, 4chan threads expire after a certain amount of time—R-rated boards, for example, expire in less time than G or PG ones—which means that users rarely see the exact same thing. Few posts last more than a few days before they’re deleted from 4chan’s servers. Posts are ‘organised’ reverse-chronologically and the site’s interface is deliberately minimalist, which can make it difficult for non-regular users to fully get it.

In other words, 4chan is a forum with no names, very few regulations, and even fewer consequences. No wonder things went south. In late 2010, a 4chan user conducted a survey of other site users, which found that most 4chan users didn’t discuss the site offline and most users wouldn’t let their kids join it.

In response, the site’s founder, Poole, urged readers to take the survey with “a massive grain of salt.” Since the website thrives on anonymity, he pointed out, there’s ultimately no way to know who uses it with any certainty.

What was born out of 4chan?

1. Celebgate, also known as The Fappening: the leak of stolen celebrity nude photos, which still exist as downloadable torrents across the internet.

2. #Leakforjlaw: a similar social media prank that encouraged women to post their nude photos in support of Jennifer Lawrence.

3. Google and poll-bombing: voting or searching for the same terms en masse, to either sabotage an online vote or make a topic trend artificially. According to The Washington Post, 4chan has successfully gotten a swastika to trend on Google.

4. #Cutforbieber: a Twitter hashtag that encouraged Justin Bieber fans to cut themselves to demonstrate their love for the performer.

5. Gamergate: an ongoing movement to expose ‘corruption’ in video game journalism, which was created by 4chan users. Gamergate has since wrecked the lives of several female gamers and commentators and spawned a larger discussion about the way that industry treats women.

6. Many fake bomb threats: a vast number of hoaxers have posted mass bomb and shooting threats to 4chan, prompting several arrests and evacuations.

7. The cyberbullying of Jessi Slaughter: one of the earliest high-profile incidents of cyberbullying, in which 4chan members sent death threats and made harassing phone calls to an 11-year-old girl, who would later make multiple suicide attempts.

8. Apple wave: an alleged feature of the iPhone 6 promoted by 4chan users on Twitter, wherein people can charge their phones by microwaving them. Needless to say, that was nothing but a hoax.

In November 2018, it was announced that 4chan would be split into two, with the work-safe boards moved to a new domain, 4channel.org, while the NSFW boards would remain on the 4chan.org domain.

When did 8chan appear?

Technically, 8chan didn’t replace 4chan. As mentioned previously, 4chan still exists, but after the platform played a considerable role in the Gamergate controversy, it was forced to ban the topic altogether, which resulted in many Gamergate affiliates migrating to 8chan instead.

Just like 4chan, 8chan, also called Infinitechan or Infinitychan (stylised as ∞chan), is an imageboard website composed of user-created message boards. Here again, anonymous users moderate their own boards, with minimal interaction from site administration.

8chan was first launched in 2013 by Fredrick Brennan, who then handed the keys over to Japanese pornography enthusiast Jim Watkins in 2016. The platform quickly became notorious for its links to white supremacist communities, as well as for boards promoting neo-Nazism, the alt-right, racism and antisemitism, hate crimes, and mass shootings. 8chan was also known for hosting child pornography; as a result, it was filtered out from Google.

Although 8chan had hundreds of topic areas, the site was most notorious for its /pol/ board, short for politically incorrect.

It was revealed that the man responsible for the mass shooting at a Walmart in El Paso, Texas, on 3 August 2019 had posted a racist, anti-immigrant screed on 8chan an hour before the attack, in which he wrote: “Do your part and spread this brothers!”

It was the third instance in 2019 of a shooter posting a manifesto to 8chan, but this time, the anonymous imageboard faced blowback from its service providers, including Cloudflare, which the site used for security, and Tucows, its domain host. Both dropped 8chan as a client. The platform went offline in August 2019, but things weren’t over yet.

In November 2019, 8chan rebranded itself as 8kun and the extreme free speech and anonymity started all over again. While the new 8kun looks identical to 8chan, it presents a few differences. 8kun is currently only accessible from the dark web, meaning that to reach it you need software like Tor, which allows users to browse the web anonymously and reach unindexed websites.

It’s not complicated to download the Tor browser, but it’s still an extra step that makes 8kun harder to find and likely to have fewer visitors than its predecessor, which had millions of users. Unlike 8chan, 8kun doesn’t have a /pol/ board, which is where the alleged perpetrators of the El Paso and Christchurch, New Zealand, massacres (as well as a shooter in Poway, California) first posted their manifestos before opening fire.

One evil replaced another: Q, the mysterious online figure at the centre of the QAnon conspiracy theory, took over from the now-dead /pol/ board as the site’s main source of controversy.

QAnon and 8kun

Q posted many messages on 8chan before believers in the QAnon theory spread them over other social networks. QAnon believers are certain that the Mueller investigation was really an effort by President Donald Trump to arrest a vast Democratic paedophile ring.

When 8chan went down, Q’s breadcrumbs stopped too. Without 8chan, Q had no method of proving that its messages were coming from the same Q who had been posting all along. But as soon as 8kun was launched, Q started posting again.

When Watkins testified to Congress in September 2019, he wore a Q pin, making it clear that he is a supporter of the conspiracy theory. Brennan, who initially founded 8chan but is now a vocal critic of the website, alleged in a tweet that “the point of 8kun is Q, full stop. Every other board migrated is just for show.”

The point of 8kun is Q, full stop.

Every other board migrated is just for show. Tom Reidel, President, N. T. Technology admitted to me that their main concern is the QAnon traffic.

Jim doesn't care about you or your board, unless you are QAnon and your board is /qresearch/. https://t.co/qEIOAtTdMi

Fredrick Brennan 🦝🔣📗 (@fr_brennan), November 5, 2019

And although it seems obvious that Q had nowhere else to go, many wondered why the community of white-nationalist trolls that had gathered on 8chan for years also moved to 8kun. Many first flocked to Discord, the chat app for gamers that’s also become a popular tool for hate groups to organise, chat, and indoctrinate new followers.

They shared links and invited interlocutors to join other groups across the internet, including on the encrypted messaging app Telegram. Others migrated to new online boards and forums that fit more specifically with their ideological leanings, like NeinChan, which attracts a particularly antisemitic crowd, or JulayWorld, which has a small fascist board that has attracted some former 8channers.

But many users started to see 4chan and 8chan’s anonymous message boards as outdated, which led some of them to give 8kun a try. 2019 marked a new era, one where extremists didn’t feel the need to hide as much anymore. They simply moved to Voat, Reddit, and YouTube and created more cesspools of hate.