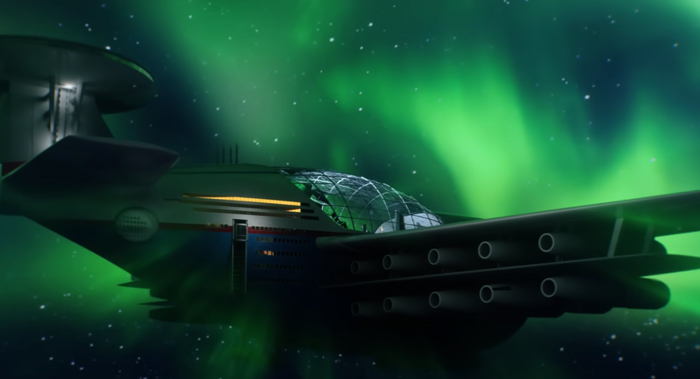

Hotel that never lands could fly up to 5,000 guests for ‘Sky Cruise’

A now-viral video has left the internet astonished after it showcased a concept for an AI-piloted aircraft that would never actually land. Designed by science communicator and video producer Hashem Al-Ghaili, who’s best known for his infographics on scientific breakthroughs, the ‘Sky Cruise’ is basically a flying hotel that boasts 20 nuclear-powered engines and could carry up to 5,000 passengers. Let me explain.

Al-Ghaili has billed the aircraft as the “future of transport” and explained that conventional airlines would fly passengers to and from Sky Cruise, which would never touch the ground and would have all repairs carried out in flight. Because its electric engines would be powered by nuclear energy, the aircraft would never run out of fuel, though the clip also stated that the hotel could “remain suspended in the air for several years,” implying that it would eventually need to land.

Reporting on Al-Ghaili’s design, the Daily Star noted that when asked how many pilots it would take to fly the Sky Cruise, he responded, “All this technology and you still want pilots? I believe it will be fully autonomous.”

Even without pilots, Sky Cruise would still need a large onboard staff, since it would include a shopping mall, pools, gyms, restaurants, luxurious rooms and even a “big hall that offers a 360-degree view of your surroundings.”

For those of you who have already started listing the drawbacks of a holiday in the sky, here are answers to a few potential worries. If you’re concerned about the risks of living above the clouds with no access to medical care should something happen to a guest, the video explained that Sky Cruise would not only use AI to predict turbulence (and avoid it when needed) but would also feature a facility equipped with the latest technology aimed at keeping passengers “safe, healthy and fit.”

While the launch date for Sky Cruise is yet to be announced, netizens are already sharing their opinions on Al-Ghaili’s idea, with one person writing in the comment section below the YouTube video: “Sounds like a disaster waiting to happen. Looks cool anyways.”

“Hilarious! It’s like someone got in a time machine, travelled to 2070, found a retrofuturism video based on our era (as opposed to the 1950s or 1800s) depicting what people from our era thought our future would look like,” wrote another.

“This is literally just the Axiom ad from Wall-e,” one user joked, while another added: “You can draw it. You can hire voice actors. You cannot hide that this is an impossibility.”