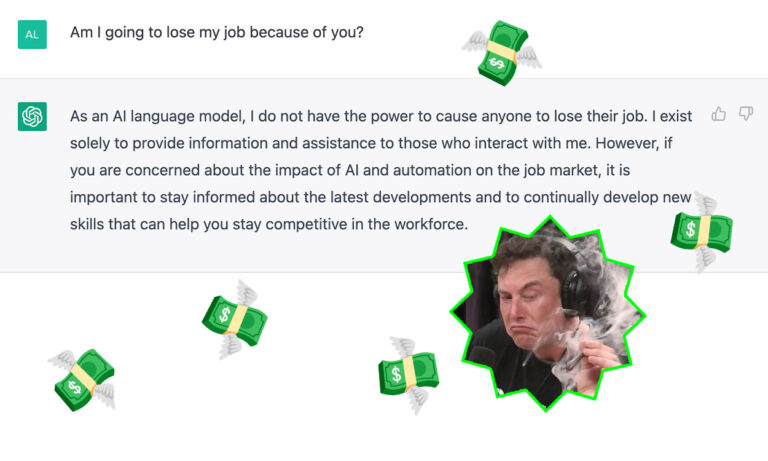

Companies are paying big bucks for people skilled at ChatGPT, and even Elon Musk is worried

On 28 March 2023, The Wall Street Journal published an article titled “The Jobs Most Exposed to ChatGPT,” which highlighted that “AI tools could more quickly handle at least half of the tasks that auditors, interpreters and writers do now.” A terrifying prospect for many, myself included.

Heck, even Twitter menace Elon Musk is freaking out, having recently signed a letter warning that human-competitive AI systems pose potential risks to society and civilisation in the form of economic and political disruption. Oh, him and 1,000 other experts, including Apple co-founder Steve Wozniak and Yoshua Bengio, often referred to as one of the “godfathers of AI.”

The letter, which was issued by the non-profit Future of Life Institute, calls for a six-month halt to the “dangerous race” to develop systems more powerful than OpenAI’s newly launched GPT-4. Of course, no one’s listening.

Despite the general worry those of us working in creative industries are currently feeling about the possibility of ChatGPT and other AI chatbot tools coming to take our jobs, some companies are offering six-figure salaries to a select few who are great at wringing results out of them, as initially reported by Bloomberg.

Considering the hype currently surrounding the technology, as well as the immense potential it holds, it’s not surprising that we’re already witnessing a burgeoning jobs market for so-called “prompt engineer” positions, with salaries of up to $335,000 per annum.

To put it simply, these roles require applicants to be ChatGPT wizards who know how to harness its power as effectively as humanly possible and who can train other employees to use those tools to the best of their ability. The rest of the work is still left to the AI.

Speaking to Bloomberg, Albert Phelps, one of these lucky prompt engineers who works at a subsidiary of the Accenture consultancy firm in the UK, shared that the job entails being something of an “AI whisperer.”

He added that educational background doesn’t play as big a part in this role as it does in countless others, with ChatGPT experts holding degrees in subjects as disparate as history, philosophy, and English. “It’s wordplay,” Phelps told the publication. “You’re trying to distil the essence or meaning of something into a limited number of words.”

Aged only 29, Phelps studied history before going into financial consulting and ultimately pivoting to AI. On a typical day at his job, the AI virtuoso and his colleagues will write about five different prompts and have 50 individual interactions with large language models such as ChatGPT.

Mark Standen, the owner of an AI, automation, and machine learning staffing business in the UK and Ireland, told Bloomberg that prompt engineering is “probably the fastest-moving IT market I’ve worked in for 25 years,” adding that “expert prompt engineers can name their price.” Still, it should be noted that people can also sell their prompt-writing skills for as little as $3 to $10 a pop.

PromptBase, for example, is a marketplace for “buying and selling quality prompts that produce the best results, and save you money on API costs.” There, prompt engineers keep 80 per cent of every sale of their prompts, as well as of custom prompt jobs, while the platform takes the remaining 20 per cent.

But when it comes to the crème de la crème, Standen went on to note that while the jobs start at the pound sterling equivalent of about $50,000 per year in the UK, there are candidates in his company’s database looking for between $250,000 and $360,000 per year.

There’s obviously no way of knowing if or when the hype surrounding prompt engineers will die down, in turn lowering the six-figure salaries currently on offer to more ‘standard’ rates. But one thing is for sure: AI tools aren’t going anywhere anytime soon, and they’re coming for our jobs.

Time to improve those prompt-feeding skills, I guess.