Meet CLIP Interrogator, the rude AI that bullies people based on their selfies

In 2022, every day seems to dawn with a new controversial AI tool on the internet. Gone are the days when netizens leveraged DALL·E 2 and the racist image-spewing DALL·E mini to generate silly art for Twitter shitposting. Instead, recent months have seen these tools deployed to win legit art competitions, replace human photographers in the publishing industry, and even steal original fan art in a growing series of ethical, copyright, and dystopian nightmares.

Just when we thought innovation on the AI generator front had come to a relative standstill, a new tool is now roasting people beyond recovery based solely on their selfies.

Dubbed CLIP Interrogator and created by AI generative artist @pharmapsychotic, the tool essentially aids in figuring out “what a good prompt might be to create new images like an existing one.” For instance, let’s take the case of the AI thief who ripped off a Genshin Impact fan artist by taking a screenshot of their work-in-progress as it was livestreamed on Twitch, feeding it into an online image generator to “complete” it first, and uploading the AI version of the art to Twitter six hours before the original artist did. The swindler then had the audacity to accuse the artist of theft and proceeded to demand credit for their creation.

With CLIP Interrogator, the thief could essentially upload the ripped screenshot and get a series of text prompts that will help accurately generate similar art using other text-to-image generators like DALL·E mini. The process is a bit cumbersome but it opens up a whole new realm of possibilities for AI-powered tools.

On Twitter, however, people are using CLIP Interrogator to upload their own selfies and get verbally destroyed by a bot. The tool called one user a “beta weak male,” a second “extremely gendered with haunted eyes” and went on to dub a third “Joe Biden as a transgender woman.” It also seemed to reference porn websites specifically when fed images of women in tank tops. Are we surprised? Not in the least. Disappointed? As usual.

CLIP Interrogator is rude AF

AI researcher as a muppet, with a punchable face.

Also thanks but no thanks for pointing out my graying hair pic.twitter.com/OMQOpexuZC

— Mark Riedl (@mark_riedl) October 20, 2022

just did a CLIP interrogator of one of my shirtless pics and it called me a "tall emaciated man wolf hybrid"

— fareed 🥥🌴#KHive (@it_is_fareed) October 21, 2022

"beta weak male" absolutely DESTROYED by the CLIP interrogator 😭 pic.twitter.com/ORO56BSHxa

— Nick is in KOREA (@ikisnick) October 21, 2022

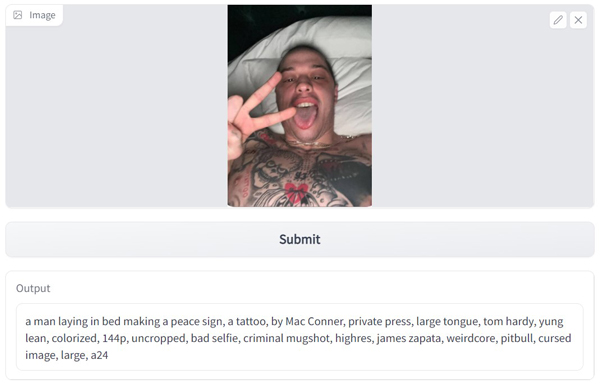

Since I don’t exactly trust an AI with my own selfies (totally not that I can’t handle the blatant roasting or anything), I decided to test the tool by uploading some viral images of public figures. On my list were resident vampire boi Machine Gun Kelly (MGK), his best bud Pete Davidson, and of course selfie aficionado Kim Kardashian.

After several refreshes and dragging minutes of “Error: This application is too busy. Keep trying!” I finally got CLIP Interrogator to generate text prompts based on one of MGK’s infamous mirror selfies. “Non-binary, angst white, Reddit, Discord” the tool spat.

Meanwhile, the American rapper’s bud Davidson got “Yung lean, criminal mugshot, weirdcore, pitbull, and cursed image” to name a few. For reference, the picture in question was the shirtless selfie that the Saturday Night Live star took to hit back at Kanye West while he was dating Kim Kardashian. Speaking of the fashion mogul, Kardashian’s viral diamond necklace selfie was described by the AI tool as “inspired by Brenda Chamberlain, wearing a kurta, normal distributions, wig.”

As noted by Futurism, CLIP Interrogator is “built on OpenAI’s Contrastive Language-Image Pre-Training (CLIP) neural network that was released in 2021, and hosted by Hugging Face, which has dedicated some extra resources to deal with the crush of traffic.” As the tool remains over-trafficked, further details are hazy at this point.
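For the curious, here is a rough idea of how a CLIP-style model can turn a picture into a string of prompt phrases. A model like CLIP maps images and text into the same vector space, so candidate phrases can be ranked by how closely their vectors point in the same direction as the image's. The sketch below is not CLIP Interrogator's actual code: the tiny three-number "embeddings," the phrase list, and the function names are all made up for illustration, and a real model would compute much larger vectors from the image and text themselves.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_phrases(image_embedding, phrase_embeddings, top_k=3):
    """Return the top_k phrases whose vectors best match the image vector."""
    scored = [
        (phrase, cosine_similarity(image_embedding, vector))
        for phrase, vector in phrase_embeddings.items()
    ]
    # Highest similarity first.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [phrase for phrase, _ in scored[:top_k]]

# Hypothetical toy vectors; a real CLIP model would produce these.
image_embedding = [0.9, 0.1, 0.3]
phrase_embeddings = {
    "mirror selfie": [0.8, 0.2, 0.3],
    "oil painting": [0.1, 0.9, 0.2],
    "criminal mugshot": [0.7, 0.0, 0.6],
    "landscape photo": [0.0, 0.3, 0.9],
}

# Join the best-matching phrases into a comma-separated prompt.
prompt = ", ".join(rank_phrases(image_embedding, phrase_embeddings, top_k=2))
print(prompt)
```

Seen this way, the "roasts" are simply whichever phrases in the candidate list land closest to the selfie, which is why biases in the model and the phrase list show up so directly in the output.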

All we know for sure is that the roast bot has a long way to go when it comes to biases, especially when used by netizens to comment on their own selfies. And given how Twitter has recorded 320 tweets under the search term ‘CLIP Interrogator’ as of today, it seems like the tool is here to stay for a while.

I’m still kind of tech-illiterate so excuse me if I have no idea what I’m talking about, but something about uploading your face to Clip Interrogator feels like a bad idea.

— dee ✧˚•¸♡˚🎀 (@fairyartmother) October 25, 2022