Tinder is using AI to monitor DMs and cool down the weirdos

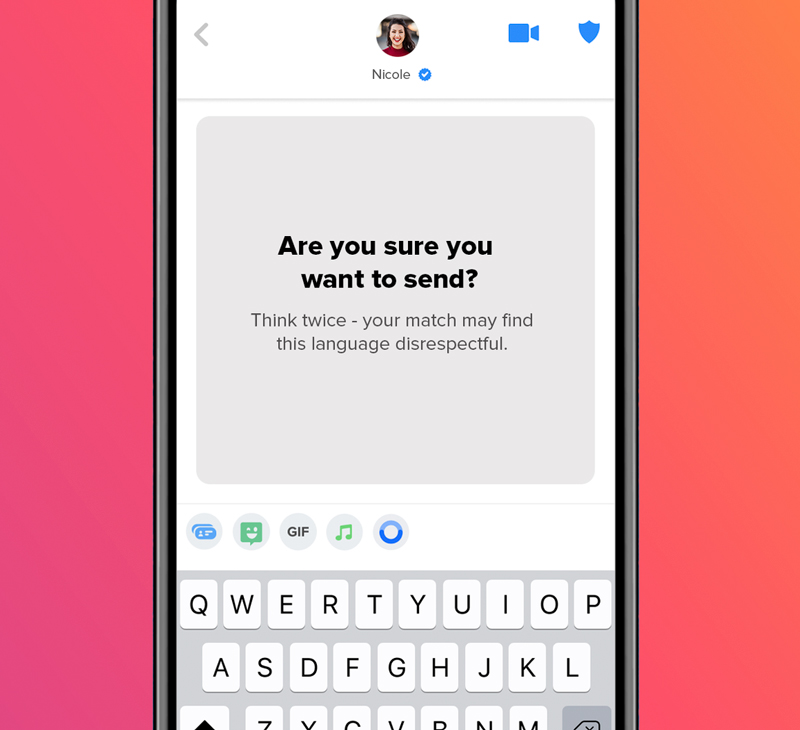

Tinder recently announced that it will soon use an AI algorithm to scan private messages and compare them against texts that have been reported for inappropriate language in the past. If a message looks like it could be inappropriate, the app will show users a prompt that asks them to think twice before hitting send. “Are you sure you want to send?” will read the overeager person’s screen, followed by “Think twice—your match may find this language disrespectful.”

To give daters an algorithm that can tell the difference between a bad pick-up line and a spine-chilling icebreaker, Tinder has been testing algorithms that scan private messages for inappropriate language since November 2020. In January 2021, it launched a feature that asks recipients of potentially creepy messages “Does this bother you?” When users said yes, the app would then walk them through the process of reporting the message.

As Tinder is one of the leading dating apps worldwide, it sadly isn’t surprising that it considers experimenting with the moderation of private messages necessary. Outside of the dating industry, many other platforms have introduced similar AI-powered content moderation features, but only for public posts. Although applying those same algorithms to direct messages (DMs) offers a promising way to combat harassment that normally flies under the radar, platforms like Twitter and Instagram have yet to tackle the many issues private messages present.

On the other hand, allowing apps to play a part in the way users interact with direct messages also raises concerns about user privacy. But of course, Tinder is not the first app to ask its users whether they’re sure they want to send a specific message. In July 2019, Instagram began asking “Are you sure you want to post this?” when its algorithms detected users were about to post an unkind comment.

In May 2020, Twitter began testing a similar feature, which prompted users to think again before posting tweets its algorithms identified as offensive. Last but not least, TikTok began asking users to “reconsider” potentially bullying comments this March. Okay, so Tinder’s monitoring idea is not that groundbreaking. That being said, it makes sense that Tinder would be among the first to focus on users’ private messages for its content moderation algorithms.

As much as dating apps tried to make video call dates a thing during the COVID-19 lockdowns, any dating app enthusiast knows that virtually all interactions between users boil down to sliding into the DMs. And a 2016 survey conducted by Consumers’ Research showed that a great deal of harassment happens behind the curtain of private messages: 39 per cent of US Tinder users (including 57 per cent of female users) said they experienced harassment on the app.

So far, Tinder has seen encouraging signs in its early experiments with moderating private messages. Its “Does this bother you?” feature has encouraged more people to speak out against weirdos, with the number of reported messages rising by 46 per cent after the prompt debuted in January 2021. That month, Tinder also began beta testing its “Are you sure?” feature for English- and Japanese-language users. After the feature rolled out, Tinder says its algorithms detected a 10 per cent drop in inappropriate messages among those users.

The leading dating app’s approach could become a model for other major platforms like WhatsApp, which has faced calls from some researchers and watchdog groups to begin moderating private messages to stop the spread of misinformation. But WhatsApp and its parent company Facebook haven’t taken action on the matter, in part because of concerns about user privacy.

An AI that monitors private messages should be transparent, voluntary, and not leak personally identifying data. If it monitors conversations secretly, involuntarily, and reports information back to some central authority, then it is defined as a spy, explains Quartz. It’s a fine line between an assistant and a spy.

Tinder says its message scanner only runs on users’ devices. The company collects anonymous data about the words and phrases that commonly appear in reported messages, and stores a list of those sensitive words on every user’s phone. If a user attempts to send a message that contains one of those words, their phone will spot it and show the “Are you sure?” prompt, but no data about the incident gets sent back to Tinder’s servers. “No human other than the recipient will ever see the message (unless the person decides to send it anyway and the recipient reports the message to Tinder),” continues Quartz.
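To make that on-device flow concrete, here is a minimal sketch of how such a check could work. It is an illustration only, not Tinder's actual code: the word list, the function names `needs_confirmation` and `send_message`, and the simple word-matching rule are all assumptions made for this example.

```python
import re

# Hypothetical placeholder list. In the flow described above, a list of
# sensitive words is stored on the user's phone; its contents are not public.
SENSITIVE_TERMS = {"exampleinsult", "examplethreat"}


def needs_confirmation(message, terms=SENSITIVE_TERMS):
    """Return True if any word in the message appears in the local list."""
    words = re.findall(r"[a-z']+", message.lower())
    return any(word in terms for word in words)


def send_message(message, confirm):
    """Decide locally whether a message goes out.

    `confirm` stands in for the "Are you sure?" prompt: a callable that
    returns True if the user chooses to send anyway. Nothing in this
    function contacts a server; the whole check happens on the device.
    """
    if needs_confirmation(message) and not confirm():
        return False  # the user thought twice and the message is not sent
    return True  # the message is sent as usual
```

In this sketch a flagged message only triggers the prompt. The sender can still go ahead, which matches the description above that the recipient sees the message if the person decides to send it anyway.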

For this AI to work ethically, it’s important that Tinder be transparent with its users about the fact that it uses algorithms to scan their private messages, and that it offer an opt-out for users who don’t feel comfortable being monitored. As of now, the dating app doesn’t offer an opt-out, nor does it warn its users about the moderation algorithms (although the company points out that users consent to the AI moderation by agreeing to the app’s terms of service).

Long story short, fight for your data privacy rights, but also, don’t be a creep.