Tinder introduces ID verification feature to tame catfishing

So you’ve matched with someone on Tinder, the world’s most popular dating app, and you’ve been chatting for a while. Things look promising, but the person on the other end sounds almost too good to be true and refuses to get on a video call. If your childhood was shaped by MTV’s Catfish and that one emotional episode of Dr. Phil, the gears in your head start churning and you begin to lose trust in your match. You then spend countless nights swiping and matching with more shady users, eventually losing interest, all in the hope of not being reeled in by a catfisher. Enter Tinder’s Identity Document (ID) verification system and its promise of authentic matches.

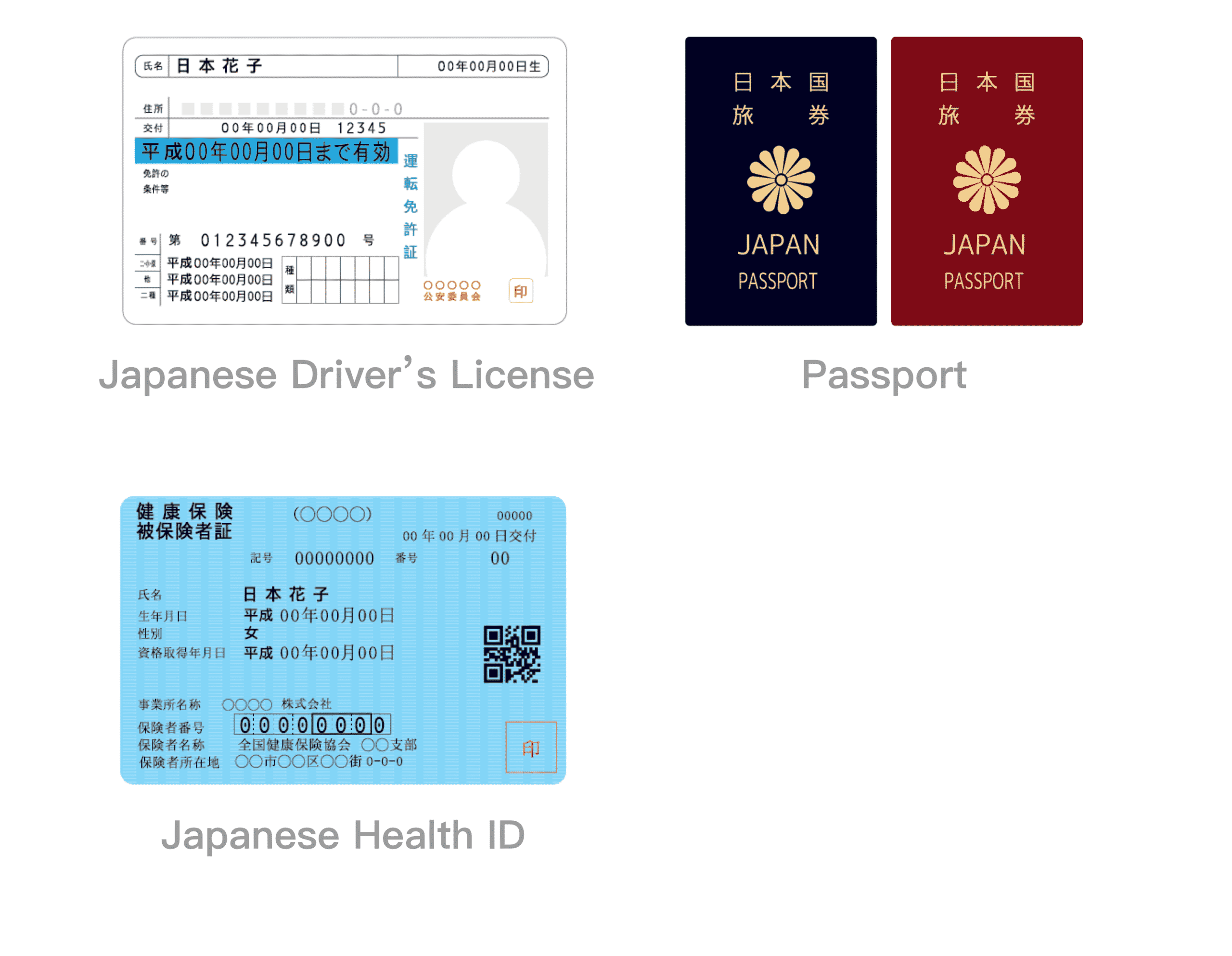

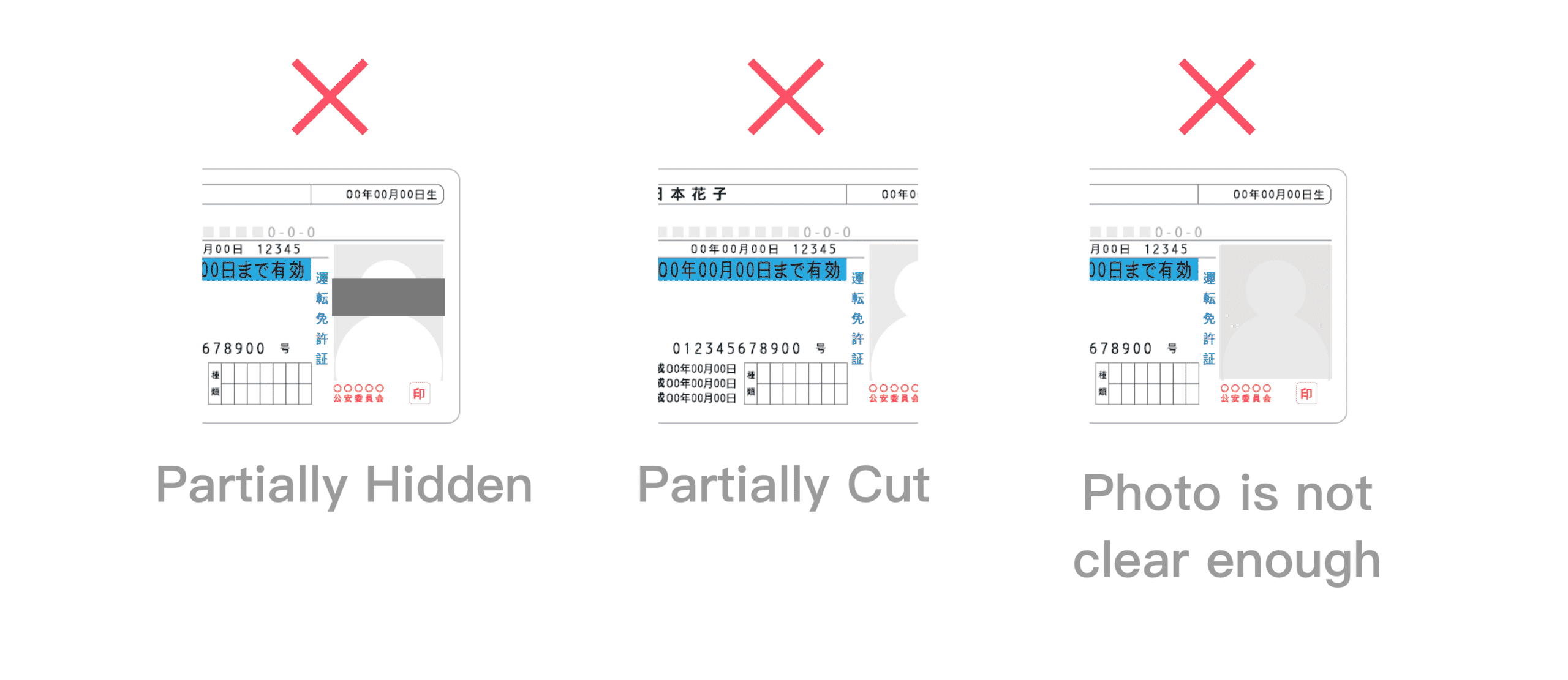

First rolled out in Japan in 2019, Tinder’s age verification model is designed to confirm that members are at least 18 years old. Users in Japan had to upload a clear picture of their passport, driver’s licence or health ID, which was then reviewed and approved before they could start chatting with their matches. In its latest announcement, the dating app said it plans to roll out ID verification globally in the coming quarters.

Tinder will consider expert recommendations and input from its members, and will review the documents required in each country, along with local laws and regulations, to determine how the feature rolls out. The feature will be voluntary except where mandated by law. Based on the feedback it receives, Tinder will then refine its model to ensure “an equitable, inclusive and privacy-friendly approach to ID verification.”

“ID verification is complex and nuanced, which is why we are taking a test-and-learn approach to the rollout,” said Rory Kozoll, Head of Trust & Safety Product at Tinder, in the press release. “We know one of the most valuable things Tinder can do to make members feel safe is to give them more confidence that their matches are authentic and more control over who they interact with.”

Tinder already offers in-app photo verification, which lets users verify themselves by taking a selfie. The feature compares the selfie with the other photos the user has uploaded and, if they match, adds a Twitter-like blue check to their profile. ID verification is meant to be yet another badge of assurance.

According to its terms of use, the dating app requires users to “have never been convicted of or pled no contest to a felony, a sex crime, or any crime involving violence, and that you are not required to register as a sex offender with any state, federal or local sex offender registry.” A Tinder spokesperson told TechCrunch that the company will use ID verification to further cross-reference data against sources such as sex offender registries in regions where that information is accessible. The company already runs a similar check through a credit card lookup when users sign up for a subscription.

With dating apps like Bumble, Zoosk and Wild already building ID verification into their sign-up processes, such models might just redefine online dating altogether, with verified users having a better chance of landing a date. However, Tinder has no plans to make it mandatory anytime soon, given that some users want to protect their identities online.

“We know that in many parts of the world and within traditionally marginalised communities, people might have compelling reasons that they can’t or don’t want to share their real-world identity with an online platform,” said Tracey Breeden, VP of Safety and Social Advocacy at Match Group, in the press release. “Creating a truly equitable solution for ID Verification is a challenging, but critical safety project and we are looking to our communities as well as experts to help inform our approach.”

Tinder has arguably led the way on safety innovation in online dating, from the mutual-consent Swipe feature to photo verification, Noonlight, and face-to-face video chats. This new feature, however, comes with a familiar downside: privacy concerns. Will users have to surrender sensitive personal information just to date? How will Tinder use this data, and how can it guarantee that the data won’t be sold to third parties or, worse, stolen in a breach?

Despite groundbreaking advances in artificial intelligence, verification models have weaknesses and biases that cannot be ignored. After all, children as young as 13 have managed to trick such systems and set up accounts on OnlyFans using their older relatives’ identity documents. If Tinder manages to pull this off, it could deliver what most online dating apps merely claim: “who you’ll like is who you’ll meet.”

“We hope all our members worldwide will see the benefits of interacting with people who have gone through our ID verification process,” Kozoll added. “We look forward to a day when as many people as possible are verified on Tinder.”