TikToker House of Dvey explains how queerbaiting invalidates LGBTQIA+ stories on the app

Some would argue that Harry Styles’ December Vogue cover was one of the few saving graces we received during the infamous year of 2020. Not only did it break the internet, but it also brought to light the deep and complicated subjects of gender norms, white privilege, appropriation and, subsequently, a noticeable trend of queerbaiting across social media. This is not a commentary on Harry Styles himself or his sexuality but an observation of the culture and consequences of rewarding certain people for breaking barriers and demonising others for doing the same.

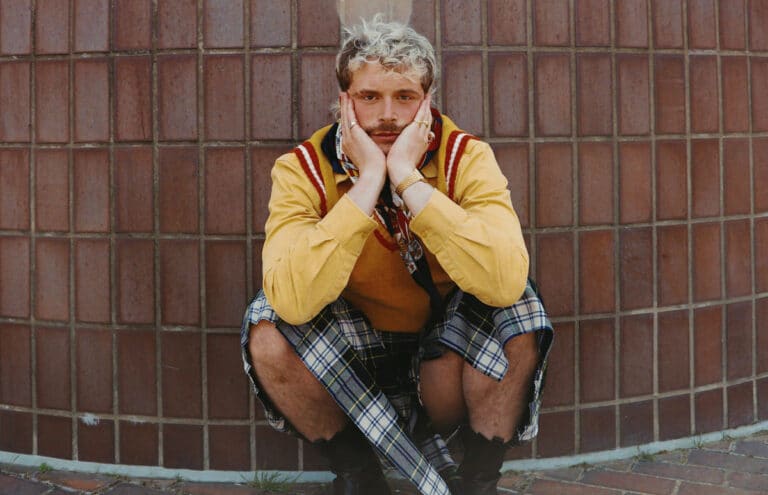

I had the privilege of sitting down with TikTok star Reece Davey, otherwise known as @HouseofDvey, to hear his thoughts on the matter. With nearly 90,000 TikTok followers under his belt, Davey has become a champion of queer identity, self-expression and fashion freedom. He seems to share similar concerns when it comes to queerbaiting: “As amazing as it is that Harry Styles is on the cover of magazines in a dress, unfortunately, if we are relying on that to end homophobia then we are in big trouble.”

Although this blurring of gender within clothing is a wonderful thing, it comes with its complications. When history is forgotten, the importance of a movement is diminished and subsequently appropriated. This is what a lot of people refer to as queerbaiting. Davey defines this term as “the use of queer coded clothing or mannerisms by straight people or sexually ambiguous individuals without understanding or supporting the communities and people that created and pioneered them.”

For example, race cannot be ignored when dealing with gender and sexuality; it was fairly pointed out in November 2020 that Harry Styles, a white man, has been celebrated as the champion of a movement he did not start. For Davey, “it’s been happening for a while but even looking back as far as the 90s and 00s there have always been people, especially straight men, who have been praised for doing things that queer people have been championing for years before.”

This disparity is becoming ever clearer in the social media landscape, especially on TikTok. There has been a huge increase online in cisgender, heterosexual-presenting individuals donning pearl necklaces and nail polish and breaking traditional gender norms in fashion. Perhaps one of the most notable examples was the maid dress trend. The same applies to the “femboy” and “soft boy” aesthetics.

TikTok influencer House of Dvey has noticed this increase: “It is definitely to do with the ‘soft boy’ aesthetic being so popular on apps like TikTok.” While this sounds incredibly positive, it can also have harmful consequences that invalidate LGBTQIA+ stories. From coming out ‘pranks’ to sexualising queer intimacy for views, the toxic rise of queerbaiting online commodifies queerness and does little to help those who actually belong to the community. For Davey, it’s not a short-lived trend but the history of his identity: “a PSA to non-queer people who are wearing the clothes that so many people fought and died for. Make sure you understand why they did that and the history that those flares, baby blue nails and pearl necklaces have.”

Unfortunately, many don’t. This goes back to Davey’s definition of what queerbaiting means to him. His real identity has been turned into a trend that non-queer people use to secure views, likes and marketability. As we near Pride month, the influencer invites us to take a more critical look at its rainbow-branded capitalism: “look around Oxford Street in June. Everything is a rainbow, being queer is very profitable for companies but after June, say ciao to any of that same pride.”

This diminishes the experiences that so many in the LGBTQIA+ community face throughout their lives. The obstacles and hardships that huge numbers have had to overcome are watered down to merely aesthetic trends. Davey is unfortunately no stranger to these oppressive binaries: “We are constantly fighting for our identities. I think that’s why it’s so hard to see people who haven’t had these experiences or these traumas walk so freely in our shoes.”

What can offer us some solace in these times is that queer influencers like Davey continue to curate an authentic presence online. There is no better way to end this article than with one of the most important statements he made during our conversation: “Please dress as flamboyant as you can but while you’re at it share a GoFundMe for homeless queer youth or sign a petition for trans people’s right to healthcare. Watch a movie about the AIDS epidemic, about the Stonewall riots or the ballroom scene of New York in the 80s. There is so much more to take from the queer community than a cute bag or earring. Just remember where you got it from.”