On LinkedIn, companies are leveraging deepfakes’ trustworthiness to boost sales

How would you describe a typical corporate headshot on LinkedIn? A close-cropped image of the person with a slightly stiff smile, slicked-back hair and a blurred background? That is exactly the kind of profile picture Renée DiResta of the Stanford Internet Observatory saw when she received a connection request from another user.

“Quick question—have you ever considered or looked into a unified approach to message, video, and phone on any device, anywhere?” the sender, Keenan Ramsey, wrote, noting that she and DiResta both belonged to a LinkedIn group for entrepreneurs. Ramsey punctuated her greeting with a grinning-face emoji before moving on to a software pitch. Nothing suspicious so far: just another piece of corporate spam that you either take the bait on or ignore entirely.

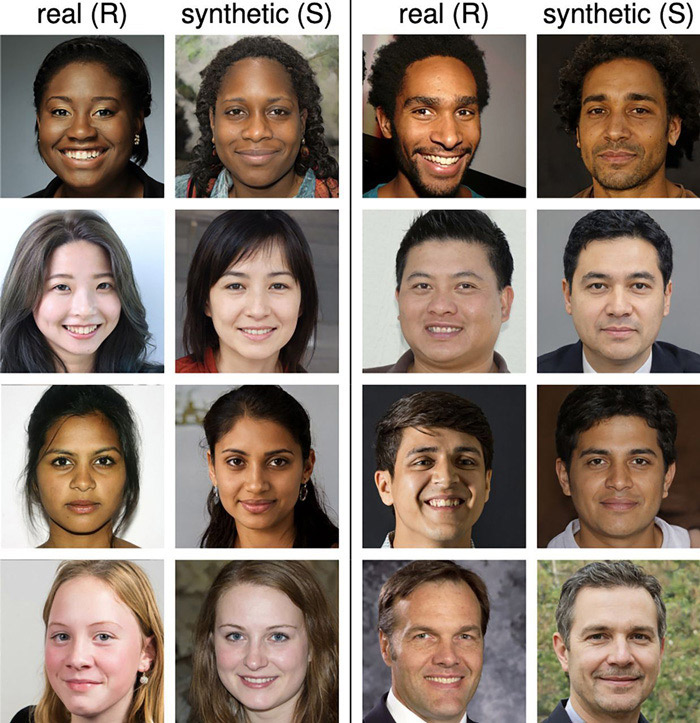

DiResta’s trained eye, however, caught what others might have missed. For starters, Ramsey was wearing only one earring in her profile picture. Some strands of her hair blended into the blurry background (which, on closer inspection, showed nothing in particular), while others disappeared and then reappeared. Then there was the placement of her eyes: DiResta noted that they sat right in the middle of the image, a tell-tale sign of an AI-generated face.

> But…RingCentral doesn’t have any record of an employee named Keenan Ramsey. NYU says no one named Keenan Ramsey has received any undergraduate degree. And the biggest red flag? Her face appears to have been created by artificial intelligence. https://t.co/TyoBp2qxIP
>
> Shannon Bond 📻 (@shannonpareil), March 27, 2022

“The face jumped out at me as being fake,” DiResta, who has previously studied Russian disinformation campaigns and anti-vaccine conspiracies, told NPR. “In the course of my work, I look at a lot of these things, mostly in the context of political influence operations,” she said. “But all of a sudden, here was a fake person in my inbox reaching out to me.” DiResta also noted that Ramsey’s profile featured a run-of-the-mill description of RingCentral, the software company where she claimed to work, along with a brief job history. The profile also listed an undergraduate business degree from New York University and a generic set of interests: CNN, Unilever, Amazon and Melinda Gates.

These observations led DiResta and her colleague Josh Goldstein at the Stanford Internet Observatory to launch a full-blown investigation into how AI-generated faces are infiltrating professional platforms like LinkedIn, and to what end. The result? The researchers uncovered more than 1,000 profiles using AI-generated faces, which listed more than 70 different companies as their employers.

NPR’s own follow-up found that most of these profiles were being used to drum up sales for the companies they claimed to work for. “Accounts like Keenan Ramsey’s send messages to potential customers. Anyone who takes the bait gets connected to a real salesperson who tries to close the deal,” NPR noted. “Think telemarketing for the digital age.”

For companies that turn to fake profiles, the main appeal is the chance to reach more customers without expanding their own workforce or hitting LinkedIn’s limits on messages. From a business perspective, creating fake accounts with AI-generated faces is far cheaper than hiring real people to run real accounts. Plus, research suggests that people tend to rate AI-generated faces as more trustworthy than real ones.

NPR also highlighted how demand for online sales leads exploded during the pandemic, as it became harder for teams to pitch their products in person. Surprisingly, several of the more than 70 businesses listed as employers on these fake profiles told NPR that they had hired outside vendors to help with sales. These companies said they had not authorised the use of computer-generated images and were surprised to learn of it.

“This is not how we do business,” Heather Hinton, RingCentral’s chief information security officer, told NPR. “This was for us a reminder that technology is changing faster than even those of us who are watching it can keep up with. And we just have to be more and more vigilant as to what we do and what our vendors are going to do on our behalf.”

Robert Balderas, CEO of Bob’s Containers in Texas, said his company had hired a firm named airSales to boost its business. Although Balderas knew airSales was creating LinkedIn profiles for people who described themselves as “business development representatives” for his company, he thought “they were real people who worked for airSales.” Bob’s Containers had already stopped working with airSales before NPR inquired about the profiles.

After DiResta and Goldstein alerted LinkedIn to the practice, the platform investigated and removed profiles that broke its policies, including its rules against creating fake profiles or falsifying information.

“Our policies make it clear that every LinkedIn profile must represent a real person. We are constantly updating our technical defences to better identify fake profiles and remove them from our community, as we have in this case,” LinkedIn spokesperson Leonna Spilman said in a statement to NPR. “At the end of the day it’s all about making sure our members can connect with real people, and we’re focused on ensuring they have a safe environment to do just that.”

For the companies in question, this marketing tactic, intentional or not, puts at risk the very trust they are trying to build with their audience. So as the deepfake technology already used to spread misinformation and harassment online makes its way into the corporate world, remember: the eyes, Chico, they never lie.