The dark side of AI-generated music: How TikTok’s obsession with fake Drake songs could harm the industry

Artificial intelligence has officially infiltrated our healthcare, education and daily lives. But with its recent strides into popular music, the question remains: How will the music industry be impacted by AI?

Four years ago, pop star Grimes boldly predicted on American theoretical physicist and philosopher Sean Carroll’s Mindscape podcast that once Artificial General Intelligence (AGI) goes live, human art will be doomed.

“They’re gonna be so much better at making art than us,” she said. Those comments sparked a meltdown on social media, since AI had already upended many blue-collar jobs across several industries, as first claimed by TIME. Still, there is no denying that the controversial singer foreshadowed today’s climate.

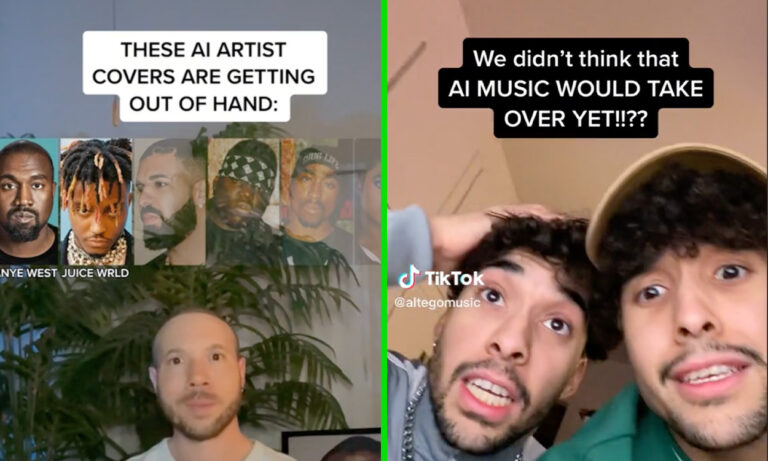

From popular DJ David Guetta using the technology to add a vocal in the style of rapper Eminem to a recent song, to the TikTok-famous twin DJs ALTÉGO using it to make an anthem in the style of Charli XCX, Ava Max and Crazy Frog, the world simply can’t get enough of AI, with some of us even becoming dependent on it.

In fact, Grimes (who is highly recognised for shaking up the music industry) has publicly invited fellow musicians to clone her voice using AI to create new songs. According to the BBC, she announced that she would split 50 per cent of royalties on any successful AI-generated song and tweeted to her more than 1 million followers: “Feel free to use my voice without penalty.” However, not all musicians feel as enthusiastic about the changing industry.

Rapper Drake expressed great displeasure over an AI-generated cover of him rapping Ice Spice’s ‘Munch (Feelin’ U)’ and said that this was “the final straw.” Shortly after, Universal Music, the label he is signed to, successfully petitioned streaming services to remove a song called ‘Heart on My Sleeve’, which used deepfaked vocals of him and The Weeknd. The label argued that “the training of generative AI using our artists’ music” was “a violation of copyright law.”

A growing number of AI researchers have warned that the technology’s ability to automate specific tasks, influence algorithms and contribute to healthcare has created not only a powerful and hopeful future but also a dangerous one, potentially filled with misinformation.

So much so that an open letter signed by dozens of academics from across the world—including tech billionaire and now owner of Twitter, Elon Musk—has called on developers to learn more about consciousness, as AI systems become more advanced. So, could the development of AI be the end of creative originality?

“It hasn’t affected my way of creating because I try to create outside the box,” Jordain Johnson, aka Outlaw the Artist, a British-born but LA-based rapper and songwriter, told SCREENSHOT. “I work with international artists so my sound is innovative within itself. For instance, my song ‘Slow you down’ mixes drum and bass and G-funk, so it’s a lot of different energies that can’t be mimicked by AI.”

Outlaw argues that AI could be a cost-free marketing tactic for up-and-coming artists trying to reach more listeners. Advertising, features and music videos can all be taken care of thanks to artificial intelligence. While the rapper remains hopeful that the new technology won’t affect his art, AI is moving at breakneck speed and is already on a dangerous trajectory. The music industry is strained enough, and the introduction of a tool that lacks nuance, spontaneity and the emotional touch that only humans can provide will only strain it further.

According to Verdict, AI-generated music will never be able to gather a mass following because it lacks emotional intelligence. Human creativity and ambition are quickly being diluted by our technological advancements, with little sign of a slowdown. “Music is often considered a reflection of the times,” so for this reason “the most compelling case for AI music is to serve as a companion to human musicians, catalysing the creative process.”

In agreement, Outlaw describes it as a natural progression: “We moved from analogue to digital, from LimeWire to SoundCloud, and most notably, from CDs to online streaming. It’s forever evolving but it’ll always have a nostalgic feel to it. I’m always looking and thinking about the next thing so AI is a tool. Some tools are sharp and could cut you, but they’re always useful.” But what happens when the tools stop needing a smith?