Elon Musk fans want to throw children in front of Teslas after its autopilot allegedly ran over kid-sized dummies

If you’re someone who keeps up with Elon Musk’s unchecked Twitter obsession, you might’ve noticed how Tesla fanboys have evolved into a full-blown subculture over time, launching a toxic slew of attacks against anyone who doesn’t fancy themselves on the “bleeding edge of innovation” or make the same “planet-friendly” choice they did. Heck, they’ll even go to ridiculous lengths just to prove their point.

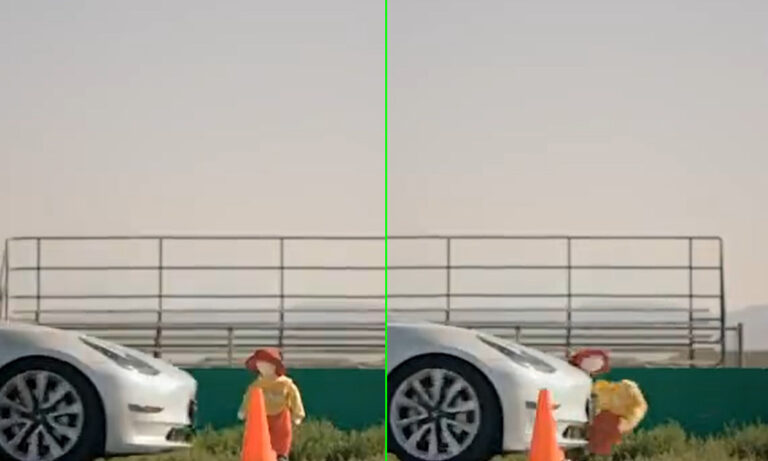

Such is the case with Twitter user Taylor Ogan, who first uploaded a video of a Tesla Model Y and a Lexus RX performing a side-by-side test to see whether their driver-assistance systems would detect a defenceless, child-sized mannequin on the road and slam on the brakes early enough to avoid running it over.

According to the video, the Tesla flunked the test, bulldozing into the fake child and yeeting its body like a bowling pin before coming to a complete stop. “It’s 2022, and Teslas still aren’t stopping for children,” Ogan captioned the post, noting that the Lexus RX, which did stop for the dummy, is equipped with LiDAR, a technology Tesla CEO Musk still refuses to use for self-driving.

“LiDAR is the same technology used in autonomous vehicles, which Tesla incidentally does not have,” Ogan went on to explain.

It’s 2022, and Teslas still aren’t stopping for children. pic.twitter.com/GGBh6sAYZS

— Taylor Ogan (@TaylorOgan) August 9, 2022

The Guardian also reported on a recent safety test conducted by the Dawn Project, where the latest version of Tesla Full Self-Driving (FSD) Beta software repeatedly ran over a stationary, child-sized mannequin in its path. The claims issued by the advocacy group form part of an ad campaign urging the public to pressure Congress to ban Tesla’s autopilot technology.

“Elon Musk says Tesla’s FSD software is ‘amazing’. It’s not. It’s a lethal threat to all Americans,” Dawn Project founder Dan O’Dowd told the publication. “Over 100,000 Tesla drivers are already using the car’s FSD mode on public roads, putting children at great risk in communities across the country.”

While footage from both tests has left the public disturbed, The Guardian noted that Tesla has earned a reputation for hitting back at claims that its autopilot is too “underdeveloped” to guarantee the safety of either the car’s occupants or pedestrians on the road. “After a fiery crash in Texas in 2021 that killed two, Musk tweeted that the autopilot feature, a less sophisticated version of FSD, was not switched on at the moment of collision,” the publication highlighted.

Our new safety test of @ElonMusk’s Full Self-Driving Teslas discovered that they will indiscriminately mow down children.

— Dan O'Dowd (@RealDanODowd) August 9, 2022

Today @RealDawnProject launches a nationwide TV ad campaign demanding @NHTSAgov ban Full Self-Driving until @ElonMusk proves it won’t mow down children. pic.twitter.com/i5Jtb38GjH

Now, it seems that Tesla fanboys, or ‘Tessies’ as some netizens call them, are hell-bent on defending their favourite automaker’s honour. Taking to Twitter, most of them argue that, since the “children” involved were made not of flesh and blood but of cardboard and stuffing, the test did not prove whether a Tesla would stop in front of an actual human child.

“Such a pointless test. Watch FSD beta. It registers every person in the vicinity and if anything, is overly cautious,” a user commented. “It has never hit anybody despite 40 million miles driven. 40 million. This is a fake, useless, non ‘real world’ test.”

Does this look like cardboard? pic.twitter.com/IrzIh3pYXz

— Taylor Ogan (@TaylorOgan) August 9, 2022

“This is staged. You’ve never said one positive thing about Tesla, ever and invest in LiDAR,” a second wrote, while a third wildly admitted: “If I’m pressing the accelerator, the car should do what I tell it to do no matter what. It shouldn’t matter if there’s 100 children in front of the car, it shouldn’t override the driver’s manual input under any circumstances. So, BS test.”

The Dawn Project’s Twitter post drew similar criticism, with one netizen stating, “Do you really think that the Americans are so stupid to believe in this staged video? I bet the driver was pressing on the accelerator pedal and the operator deliberately didn’t film the bottom of the Tesla screen so the viewers wouldn’t see a warning about it.”

Nice defamation campaign against tesla. I am a FSD user and i have been using it in crowded areas with lots of people and the car stops for every person. This ad is misleading and built based off lies.

— Ricky Thach (@Ricky_Thach) August 12, 2022

According to most enthusiasts, the driver seated behind the wheel in the Dawn Project’s safety test never actually engaged FSD, as the lines on the screen were still grey when recorded on camera. “When FSD is engaged, the lines turn blue,” a user explained. On 11 August 2022, The Guardian also updated the headline of its article to indicate that the Dawn Project’s test results were “claimed by the group, and have not been independently verified.”

Nevertheless, as soon as someone commented “but that’s a fake child. I bet it would work if it was a real child,” Tesla fanboys got to work, with one Twitter user risking it all to prove that the world’s leading electric car would stop for an actual child.

“Is there anyone in the Bay Area with a child who can run in front of my car on Full Self-Driving Beta to make a point? I promise I won’t run them over… (will disengage if needed),” @WholeMarsBlog tweeted, adding that it was a “serious request.”

“This is completely safe as there will be a human in the car,” the user continued, later updating their followers with: “Okay someone volunteered… They just have to convince their wife.” I wish this were a joke. But the enthusiast then detailed a list of instructions that would be followed during the test, including the fact that the child’s father would be seated behind the wheel.

Given that the Dawn Project’s claims have yet to be independently verified and could well be another “smear campaign” against Tesla, as enthusiasts have labelled it, it’s fair to say the advocacy group’s findings are not 100 per cent believable at this point. That being said, using living, breathing children as test subjects to prove a point is never a good idea.

As Andrew J. Hawkins penned in his open letter published by The Verge, “A lot of kids die in car crashes every year, but the numbers are going down. Let’s keep it that way.”

— James Lloyd (@jamesplloyd) August 10, 2022