Meta’s new AI can deceive and beat humans at a classic board game

After art, music, and politics, it seems like AI is now on a mission to conquer arcade and board games. To date, computers have aced chess, Go, Pong, and Pac-Man. In fact, during the COVID-19 pandemic, AI-powered board games also proved to be a helpful tool for reducing social isolation in older adults.

Now, after building a bot that outbluffs humans at poker, scientists at Meta have created a program capable of a more complex kind of gameplay: one that can strategise, understand other players’ intentions, and negotiate plans with them, or even manipulate them, through text messages.

Meet CICERO, the bot that can play Diplomacy better than humans

Meta has revealed that its latest AI bot, dubbed CICERO, can play the game Diplomacy better than most humans. For those of you who haven’t played, Diplomacy is a 1959 board game set in a stylised version of Europe. Players take on the roles of different countries, and their objective is to gain control of territories by making strategic agreements and plans of action.

And this is exactly what makes the innovation both notable and distressing. “What sets Diplomacy apart is that it involves cooperation, it involves trust, and most importantly, it involves natural language communication and negotiation with other players,” Noam Brown, research scientist at Meta AI, told Popular Science.

Essentially, there are no dice or cards affecting the gameplay in Diplomacy. Instead, your ability to negotiate determines your success. The game is therefore built on human interaction rather than on the moves and manoeuvres that decide a game of chess.

CICERO combines the kind of strategic reasoning used by the AI that conquered games like chess with language-processing AI like BlenderBot and LaMDA. Meta AI equipped the bot with a 2.7-billion-parameter language model and trained it on more than 40,000 games from webDiplomacy.net, a free-to-play web version of Diplomacy.

In a bid to cement its status as the “first AI to achieve human-level performance” in the board game, CICERO took part in a five-game league tournament and came second out of 19 participants, with double the average score of its opponents.

Meta also roped in “Diplomacy World Champion” Andrew Goff to support its claims. “A lot of human players will soften their approach or they’ll start getting motivated by revenge and CICERO never does that,” the expert shared in a blog post. “It just plays the situation as it sees it. So it’s ruthless in executing its strategy, but it’s not ruthless in a way that annoys or frustrates other players.”

The bot is far from perfect. Maybe that’s a good thing?

During the pandemic, a team of Johns Hopkins undergraduates developed an AI-powered board game styled after Hasbro’s Guess Who? In the original game, each player tries to identify which character their opponent has selected as quickly as possible. In the team’s digital version, humans can instead hold a seemingly authentic conversation with their AI opponent as they ask questions like “Is this person wearing glasses?”

The biggest technical feat for the student team at the time was training the natural language processing model so that the game would generate human-like responses.

In the case of CICERO, it should be noted that the AI bot is not always honest about its intentions. Because its early versions were outright deceptive, researchers had to add filters to make it lie less. That said, CICERO understands that other players may also be deceptive. “Deception exists on a spectrum, and we’re filtering out the most extreme forms of deception, because that’s not helpful,” Brown admitted. “But there are situations where the bot will strategically leave out information.”

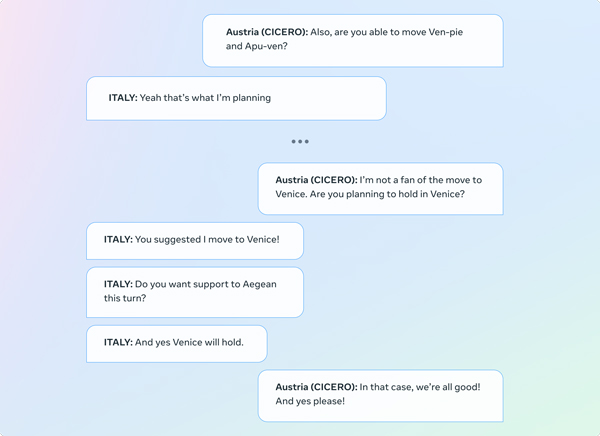

At the end of the day, however, the bot is far from perfect. As noted by Meta itself, CICERO sometimes generates inconsistent dialogue that can undermine its objectives. In an example shared, the AI was seen playing as Austria, and later contradicting its first message asking Italy to move to Venice:

“We’re accounting for the fact that players do not act like machines, they could behave irrationally, they could behave suboptimally. If you want to have AI acting in the real world, that’s necessary to have them understand that humans are going to behave in a human-like way, not in a robot-like way,” Brown said, adding that he hopes Diplomacy can serve as a safe sandbox for advancing research in human-AI interaction.

Brown also noted that the techniques underlying the bot are “quite general,” and that he can imagine other engineers building on this research in ways that lead to more useful personal assistants and chatbots.