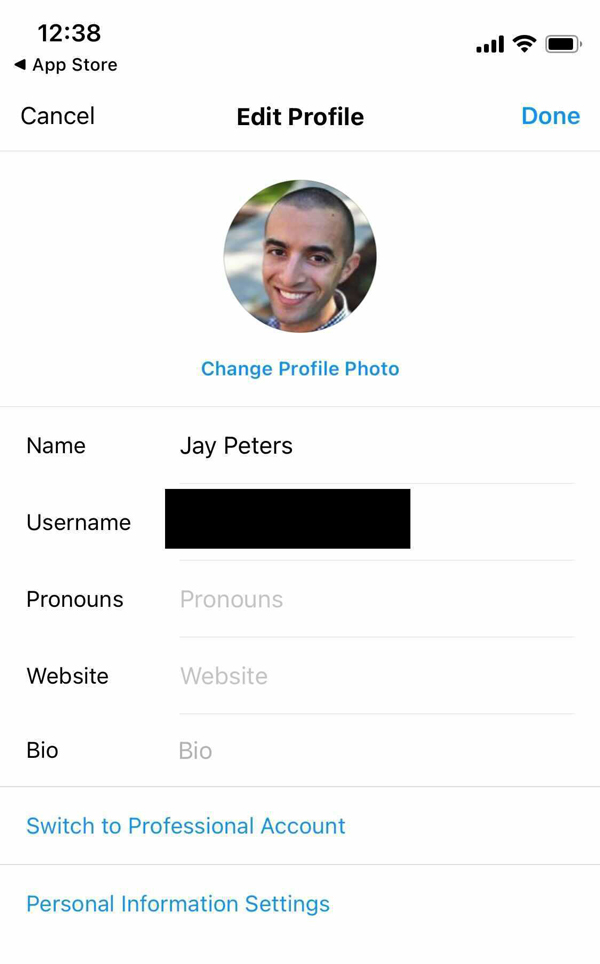

Instagram launches new feature that lets users add pronouns to their profiles

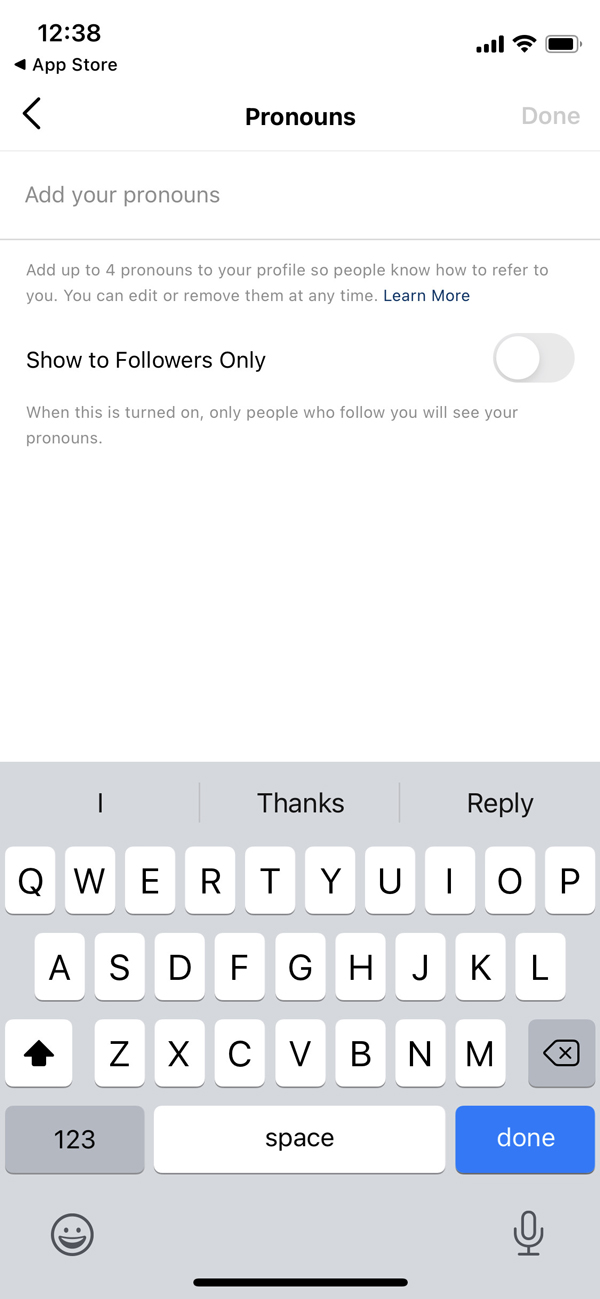

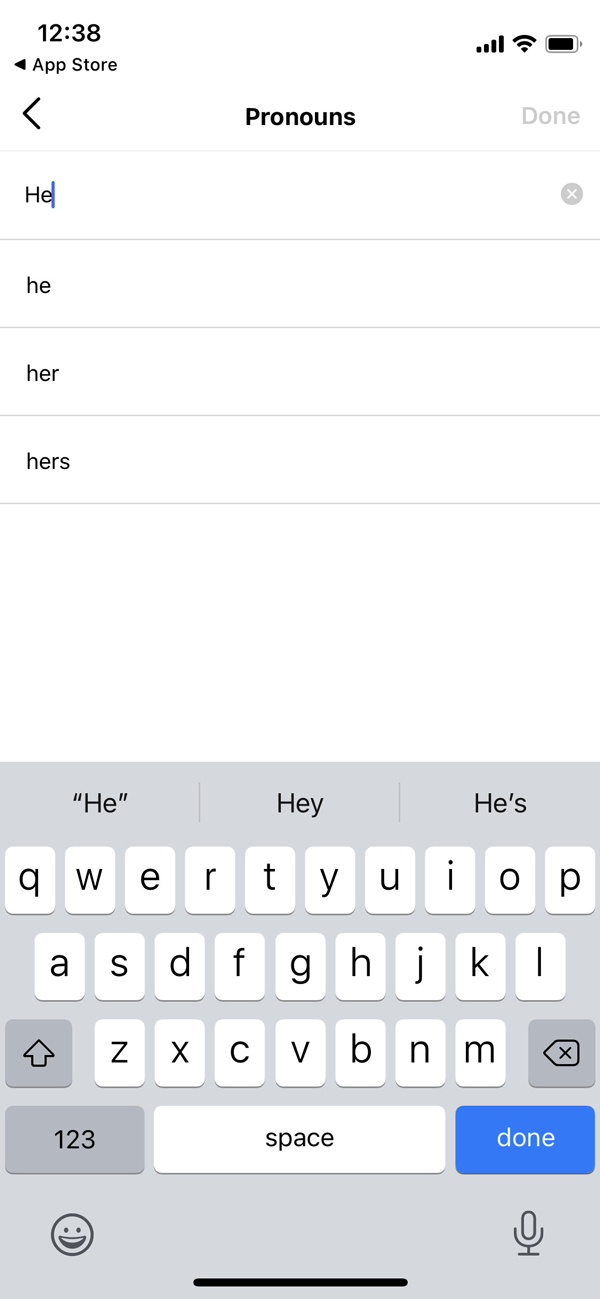

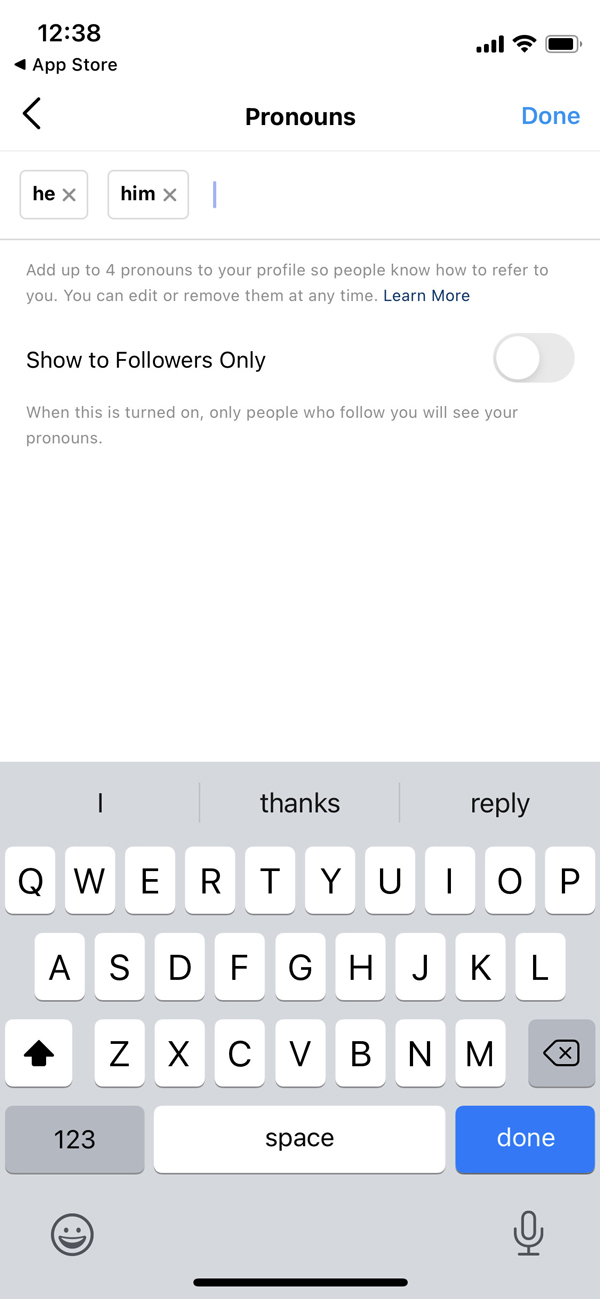

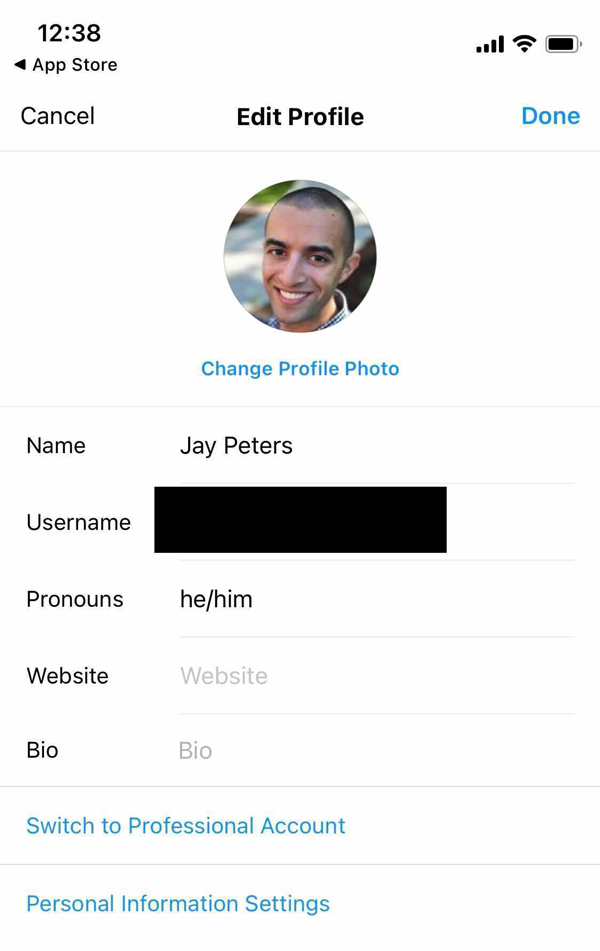

Instagram is finally making it easier to address people by their defined pronouns! Today, 12 May 2021, it revealed on Twitter that it has launched a new feature that lets users add up to four pronouns to their profile. “Now you can add pronouns to your profile with a new field. It’s another way to express yourself on Instagram, and we’ve seen a lot of people adding pronouns already, so hopefully, this makes it even easier. Available in a few countries today with plans for more,” wrote the social media giant.

> Add pronouns to your profile ✨
>
> The new field is available in a few countries, with plans for more. pic.twitter.com/02HNSqc04R
>
> Instagram (@instagram), May 11, 2021

Users can choose to make their pronouns public or visible only to their followers. For now, only a few countries have access to the feature, and the company has not yet shared its plans for expanding it to all markets.

For users under 18, the followers-only visibility setting will be turned on by default. Instagram says people can fill out a form to request a pronoun that is not already available, or simply add it to their bio instead. A couple of members of the Screen Shot team already have the pronouns setting available to them, suggesting it’s live in the UK.

Instagram is certainly not the first platform to allow users to add pronouns to their profiles. “Dating apps, like OkCupid, have already introduced the feature, as have other apps like Lyft,” explained The Verge. Interestingly, Facebook has allowed users to define their pronouns since 2014, although the feature limited people to “he/him, she/her, and they/them.” This appears to still be the case, whereas Instagram will offer more options.