How fashion brand UNLABELED’s wearable technology prevents AI from detecting humans

The integration of AI into today’s society is developing so rapidly that it is, in part, becoming our norm. Bringing swift and dramatic changes for a supposed good, AI is being used to tackle racial inequality, diagnose medical conditions like dementia, predict suicide attempts, combat homelessness in the UK and even offer you legal advice (that’s right, an AI lawyer). However, while newer technologies have their undeniably progressive qualities, AI also reminds us of the terrifying prospect of monitoring on the level of George Orwell’s 1984, as well as its clearly evident failings.

Such examples include its potential use in the US to spy on prison inmates, Facebook’s AI labelling black men as ‘primates’, the economic exploitation of vocal profiling, its continued inability to distinguish between different people of colour and its worryingly uncontrollable future, to name but a few. This small list of incidents is enough to raise the question: should we fear AI? Well, a new fashion brand on the rise seems to think so.

UNLABELED (stylised as all-capitalised) is an incredible and exciting new artist group and textile brand that is shaping fashion’s relationship with AI. Founded in Japan—in collaboration with Dentsu Lab Tokyo—creators Makoto Amano, Hanako Hirata, Ryosuke Nakajima and Yuka Sai have developed what is described as a “camouflage against the machines.” Its specially designed garments are constructed with specific patterns that prevent any AI used in the real world from recognising you. Don’t worry, we’ll break down how this all works, but first, let’s look at why the team decided to create the brand.

The details of the creators’ project, found on the Computational Creativity Lab website, divulge the reasoning behind the creation. “Surveillance capitalism is here,” it states. “Surveillance cameras are now installed outside the homes as well as in public places to monitor our activities constantly. The personal devices are recording all personal activities on the internet as data without our knowledge.” The brand’s accompanying project documentation video, found on the same page, further details how this acquired data on us may be used: “The system transforms our everyday behaviour into data and abuses it for efficiency and pursuit of profit.”

For the creators, “the physical body is not an exception” when it comes to AI exploitation of our data. “With the development of biometric data and image recognition technology for identifying individuals, the information in real space is instantly converted into data,” they write. “Thus, our privacy is threatened all the time. In [this] situation, what does the physical body or choice of clothes mean?” From this question, UNLABELED’s fashion camouflage to evade information exploitation was born.

A video on the Computational Creativity Lab website showcases the garments in action, comparing two individuals: one wearing the garment and one in normal clothes. “[When] wearing [UNLABELED’s] particular garments, AI will hardly recognise the wearer as ‘human’, [while] people wearing normal clothes are easily detected,” the video narrates. And it appears to work. The camera is no longer able to recognise the person wearing UNLABELED. The brand’s name is definitely fitting, but how does it work?

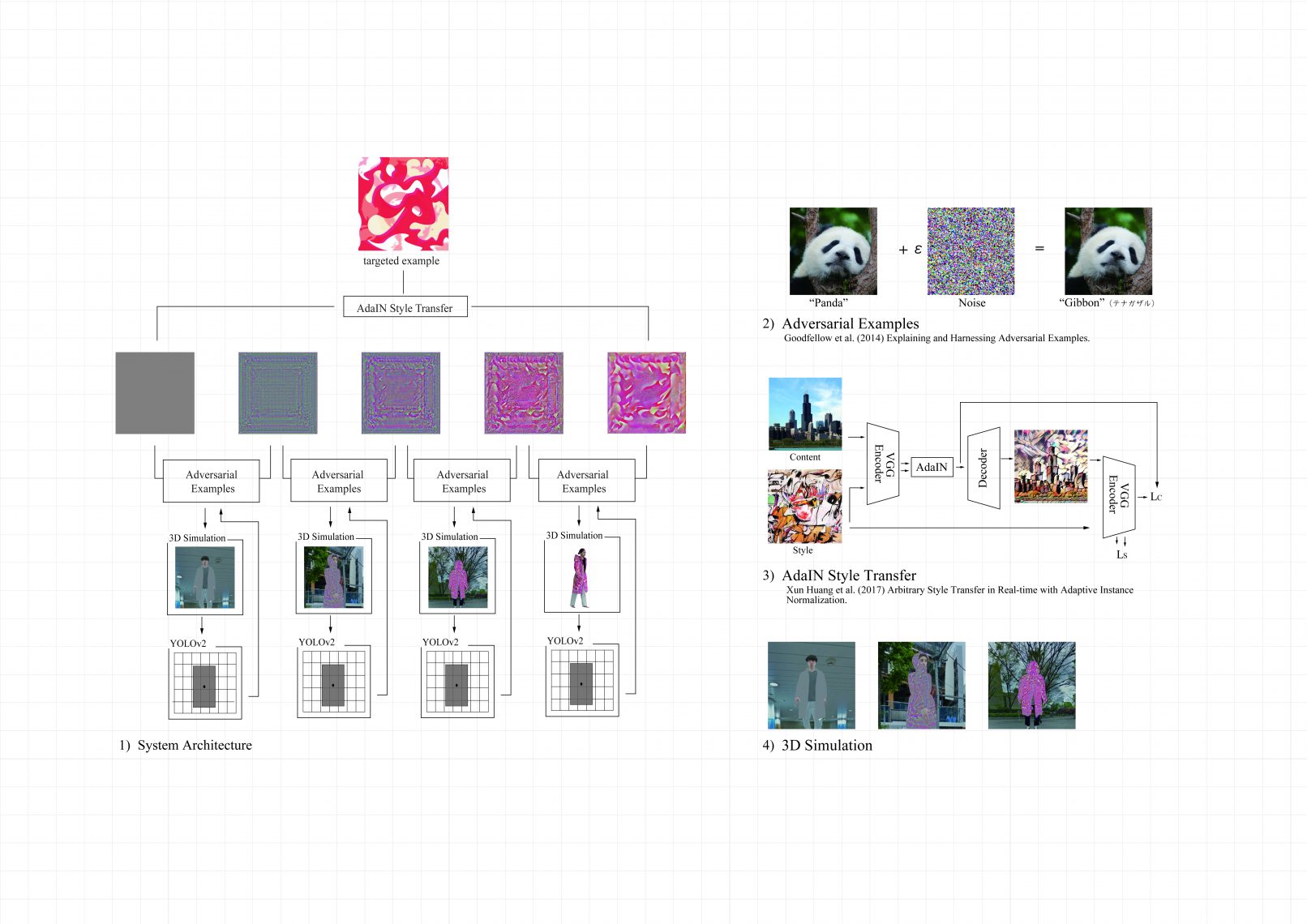

If you guessed AI, you’d be right. UNLABELED is fighting AI with AI. The brand’s team have developed a series of patterns, like the one showcased above, that work to confuse surveillance AI. The patterns were themselves generated by another AI model. “In order to fool AI, we adversarially trained another AI model to generate specific patterns inducing AI to misrecognize, then created a camouflage garment using the pattern,” the creators explain in the project documentation video.

UNLABELED describes this technique as an “Adversarial Patch” or “Adversarial Examples.” The method involves adding specific patterns, or small amounts of noise invisible to the naked eye, to images or videos with the intention of inducing false recognition in the AI. When successful, the resulting patterns cause AI to misrecognize shapes and objects. UNLABELED notes that the technique is currently widely used in research to improve on the shortcomings of surveillance systems, but the brand has flipped that shortcoming to its products’ benefit to “protect our privacy.”
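To make the idea of an adversarial example a little more concrete, here is a minimal sketch in Python. It is not UNLABELED’s method: the brand says it trained a second AI model to generate its patterns, whereas this toy uses a single gradient-sign step (in the style of the fast gradient sign method) against a made-up linear “person detector”. The weights, the four-value “image” and the `epsilon` budget are all hypothetical and chosen purely for illustration.

```python
import math


def person_score(weights, bias, pixels):
    """Toy detector: logistic score in [0, 1] that the image shows a person."""
    z = sum(w * x for w, x in zip(weights, pixels)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    """Return -1, 0 or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def adversarial_image(weights, pixels, epsilon):
    """Nudge each pixel by at most epsilon in the direction that lowers the score.

    For this linear model, the gradient of the score with respect to a pixel
    has the same sign as that pixel's weight, so we step against sign(w).
    Pixel values are clipped to stay in the valid range [0, 1].
    """
    return [
        min(1.0, max(0.0, x - epsilon * sign(w)))
        for w, x in zip(weights, pixels)
    ]


# Hypothetical detector parameters and a tiny 4-"pixel" image (assumptions).
weights = [2.0, -1.5, 3.0, -2.5]
bias = -0.5
image = [0.8, 0.2, 0.7, 0.3]

patched = adversarial_image(weights, image, epsilon=0.3)

clean_score = person_score(weights, bias, image)
patched_score = person_score(weights, bias, patched)

print(f"clean image score:   {clean_score:.2f}")
print(f"patched image score: {patched_score:.2f}")
```

With these made-up numbers, the clean image scores above 0.5 (“person”) and the perturbed one falls below it, even though no pixel moved by more than 0.3. Real detectors are far more complex, and a printed garment also has to survive folds, lighting and camera angles, which is presumably part of why the team trained a dedicated model rather than relying on a single step like this.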

“Once the adversarial pattern is made, we lay them out onto the 2D-pattern. Then, the pattern [is] printed onto plain polyester blend fabric with transcription. After printing, we follow the general garment production procedure,” UNLABELED states. The brand has even developed a skateboard in the same AI-evading patterns. The products are available for purchase on the brand’s website.

While such fashion technology is not yet available on a wide scale, it does indicate a continuing positive shift against heavy monitoring. I mean, we all hate it when those adverts pop up a few minutes after we’ve merely mentioned the name of a specific product. I know you’re listening to me, Apple. However, there are two sides to every coin, even when it comes to AI. While this technology could help us avoid surveillance, it could help anyone avoid surveillance, if you know what I mean. From my own perspective, however, it’s another sign of a generation rebelling against the norm, and I fucking love it.