I spent a day on Douyin, the Chinese version of TikTok, and I was surprised by what I found

Endless scrolling on TikTok has left me tired and bored. In need of something new to spice up my social feeds and provide the emotional fulfilment that only short-form video can deliver, I decided to try out the Chinese version of the app, Douyin.

We hear a lot of rhetoric in the West about oppressive regimes in the East, and in particular about the extensive limits China places on its citizens’ personal freedom. As I prepared for my digital adventure, I was definitely intrigued to see how my experience on Douyin might differ from TikTok.

TikTok itself is far from a wholly safe experience, having been cited as a cause of depression in teenagers and a contributor to a decline in children’s self-confidence. SCREENSHOT’s own investigation into the app highlighted just how easy it is to find yourself radicalised by the ever-evolving For You Page algorithm.

Let’s first lay down the parameters for my journey into the video void. Unfortunately, we’re unable to make it out of the shallow end of the For You Page (FYP), because you need a Chinese ID to create an account. In other words, this escapade won’t be tailored to me, as I’m unable to get into the underbelly of the app (if one even exists on Douyin).

Also, I lack even a basic understanding of Mandarin, so this, paired with the lack of a proper account, should make for a very interesting tour of the front-facing videos from the Chinese mainland. What is being shown to fresh faces scrolling through the app for the first time? Let’s get to it.

Douyin scrolling begins

The first thing I’m greeted with as I load up the web version of the app (which is the easiest way onto Douyin) is what looks like a music video directed by Wong Kar-wai. A fisheye lens follows a model as she dances and poses around her city. Nothing massive to report as of yet.

Scrolling onwards, I’m bombarded by clips of random early-noughties trash flicks, with a calm-voiced narrator who I assume is explaining the plot of the film to viewers. This reminds me a lot of the content I once saw on a fresh account in the West.

The content that pops up most on my initial scroll is strange videos of highly idealised models looking moody against a variety of backdrops. It’s hard to tell whether these are ads, scenes from music videos or just straight-up influencer montages. I keep scrolling.

After sampling some anime clips, short videos of cherry blossoms blowing in the wind and intricate signs for different shops, I see something distinctly different from what I’d expect to come across on TikTok.

A short clip shows several men sitting and enjoying what appears to be a rooftop barbecue, liquor in hand, singing as the camera pans across the sizeable banquet before them. Why don’t we sit around singing with the bros in the West? This one video definitely starts to steer my feed in a much more wholesome direction than I was expecting.

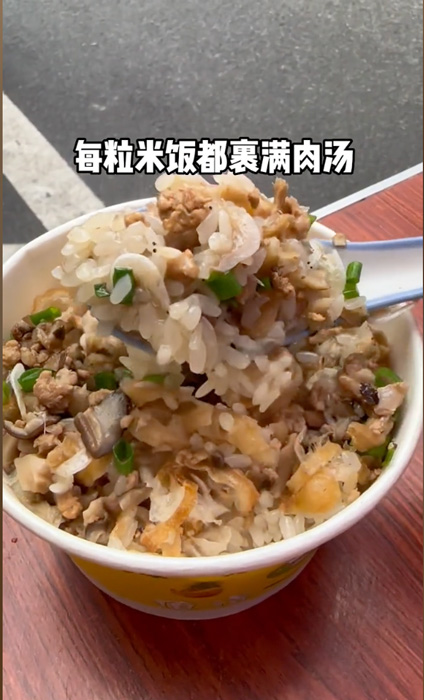

The journey continues. Cooking videos have started to appear, and honestly, Western TikTok’s answer to food porn has absolutely nothing on Chinese street food.

Something that becomes increasingly apparent the more I scroll is just how similar Douyin content is to what I’m used to on TikTok. Yes, there are some cultural differences, but there are also some overwhelmingly universal themes: food videos, cute pet reactions, and movie clips cut up into short, easily digestible snippets.

The choice of audio, on the other hand, is vastly different. Most background tracks are wholesome, uplifting songs, and while I don’t understand the lyrics, I can only assume they are just as innocent.

This next video of an all-boy dance troupe killing it has to be seen to be believed. I’m completely obsessed with how truly camp and high-energy this is.

Suggestive content on Douyin

This next video caught my attention immediately. At first, I thought it was a sketch, so I tried to follow along using Google Translate’s photo translation feature. From what I was able to decipher, a man picks up what appear to be three prostitutes and attempts to sell one to a friend.

It might have been Google’s shoddy translation, but it quickly became apparent that this wasn’t a sketch at all, and more of a strange scenario, perhaps designed to promote positive values among China’s citizens. From what I gathered, there was a message in there somewhere about self-worth and respect: the friend ends the video seemingly in love with one of the girls, while the pimp in a gaudy gold chain is dismayed at losing her.

I wasn’t able to fully come to grips with what was actually going on in the video, but it did strike me as vastly different to the content we get on our own version of the app.

Given that China is known worldwide for the strict morality guidelines it imposes on its citizens, I also wasn’t expecting to see these kinds of suggestive model videos show up on my feed.

Not long after, it became apparent that my feed was failing to evolve in any meaningful direction without a proper account, and so my time on Douyin was officially at an end. We were a long way from home, but it really didn’t feel too alienating, apart from not being able to understand anything, of course.

I can only speculate about how different the app would be if I were able to shape its algorithm properly. Either way, my surface-level observation of the platform revealed a great similarity between the two versions of the app. Dogs, dancing and dining made up the bulk of the content, with the biggest difference being the music that accompanied the visuals.

If you’re interested in trying China’s version of the app, you won’t get far without a Chinese ID. Still, I hope this mini experiment has given you a suitable peek into what else is out there, and perhaps even the urge to explore it yourself.