Introducing xenobots, a group of ‘living’ robots that can reproduce with the help of AI

As the world of technology becomes more advanced every day, new discoveries are abundant and impact just about every area of our lives. From pint-sized robots being introduced in South Korean schools to predicting types of synthetic drugs before they even hit the market, AI is evolving, and fast. With the possibility of robot lawyers on the horizon, it makes you wonder what else AI will allow humanity to achieve. Xenobots are definitely one of these wonderful advancements. Let me explain.

Researchers have recently discovered that what they’re calling tiny ‘living’ robots (synthetic lifeforms known as ‘xenobots’), each made from thousands of frog stem cells, can reproduce in an unprecedented way. A peer-reviewed study published in the Proceedings of the National Academy of Sciences (PNAS) earlier this week, on Monday 29 November, laid out how these bizarre yet promising bots manage it.

The small blobs were first made public in 2020, after experiments showed that they could move, work together in groups and self-heal. The University of Vermont (UVM), where these robots are being developed, now claims that xenobots are “entirely new life-forms,” as previously reported by CNN. The university conducted the research alongside Tufts University’s Allen Discovery Center, which is based in Massachusetts.

CNN noted that xenobots could even help in the fight against climate change by cleaning up radioactive waste and collecting microplastics from the oceans. And that’s not all: the outlet also suggested that the robots could become medical miracles, transporting medicine inside human bodies or travelling into our arteries to scrape out plaque. Since we already know they can survive in watery environments for weeks without additional nutrients, xenobots could become a serious contender for internal drug delivery. But what are they exactly?

What are xenobots?

Well, although they’re built from frog stem cells, xenobots are technically robots. Now, these robots aren’t little constructions of silicon and metal like you’re probably imagining. Oh no, no, no: they’re made from clusters of stem cells. The name xenobot may sound strange and futuristic at first, but it’s derived from the name of the frog the stem cells come from: the African clawed frog, also known as Xenopus laevis.

Xenobots are tiny (I mean teeny tiny): less than a millimetre (0.04 inches) wide, which makes them small enough to travel inside the human body. Although they come from genetically unmodified frog embryos and have no digestive system or neurons, they have a lot of surprising qualities: they can walk and swim, survive for weeks without food, and work collaboratively in groups. Pretty scary how advanced they are, right?

Back in 2020, Joshua Bongard, a computer scientist and robotics expert at UVM and one of the co-lead authors of the study, told The Independent, “They’re neither a traditional robot nor a known species of animal. It’s a new class of artifact: a living, programmable organism.”

Since they come from stem cells, xenobots already raise a great many unique and interesting questions in the field of robotics, the first being, ‘What does “robot” really mean?’ Pondering that very question, lead author of the study and professor of biology at Tufts Michael Levin told Gizmodo, “Defining ‘robot’ was never easy, although older technologies sort of obscured that fact and made it seem like we knew what a good definition of ‘robot’ was and how one differed from amoebas, bacteria, fish, humans, etc.”

According to the latest news on xenobots, Bongard and his collaborators seem to have ventured further into uncharted territory, giving the ‘living’ robots the ability to self-replicate and spawn new versions of themselves with the help of AI.

“They definitely do not grow into frogs, they actually keep the form that we impose on them. And they look and act in ways very different from normal frogs,” he told The Guardian.

But before we get to the latest exciting scientific finding about xenobots, it’s worth learning more about how they came to be. Stem cells are unique little things: they are unspecialised cells that can develop into different cell types. For all our body’s needs, stem cells are the helping hands that build and rebuild whatever we need them to, including blood cell regeneration in vagina vampire facials for the coveted back-to-back O’s.

Previously, the researchers scraped living stem cells out of frog embryos and left them to incubate. The cells then began to work on their own, bonding together to form structures. In the first xenobots, pulsing heart muscle cells allowed the robots to move on their own; later versions propelled themselves using hair-like projections called cilia. Xenobots even have self-healing capabilities: when the scientists sliced into one robot, it healed by itself and kept moving. Though they do eventually biodegrade (insert sad face), it is still remarkable that their lifespan can be extended in a nutrient-rich environment.

How are xenobots capable of reproduction?

Our third xenobots paper was published today in PNAS.

In a nutshell: they can now self-replicate.

w/ @DougBlackiston, @drmichaellevin, @DoctorJosh

More info: https://t.co/ySLBHeaGlL pic.twitter.com/vTvS2E3PoG

Sam Kriegman (@Kriegmerica), November 29, 2021

Now that we’ve caught up on the basics, let’s get into what the newest research tells us about the bots. In the study, researchers from the two universities mentioned above, as well as Harvard University’s Wyss Institute for Biologically Inspired Engineering, reported the big news that xenobots can reproduce.

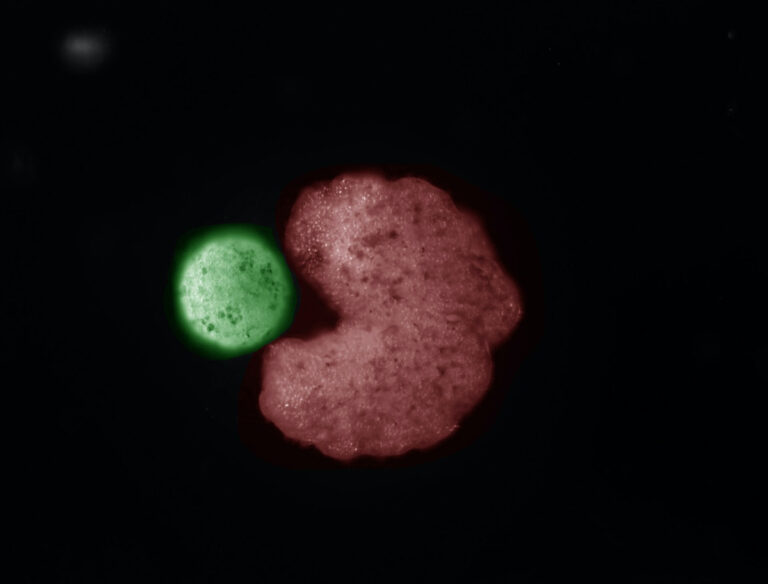

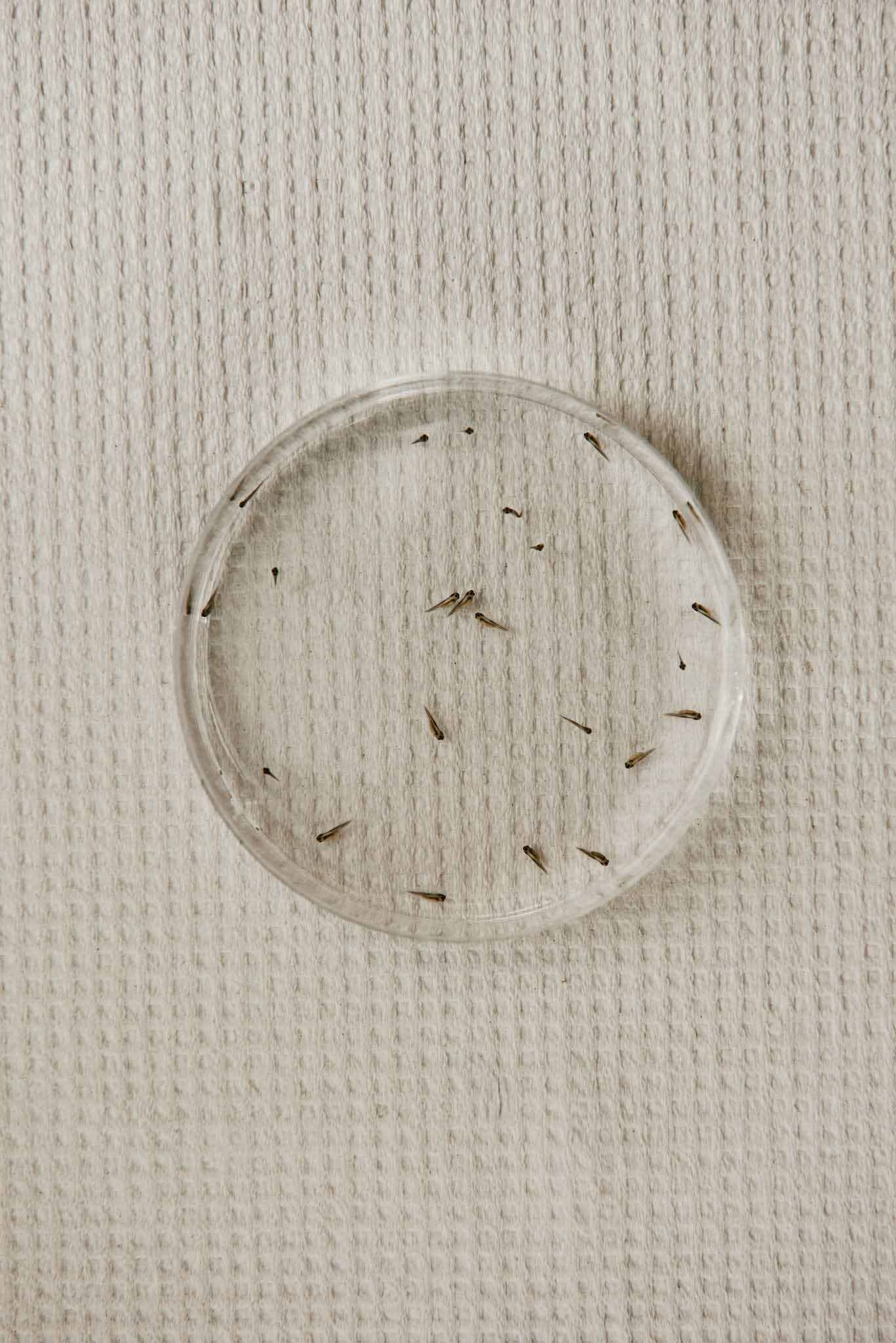

The team observed the living robots, each made from around 3,000 stem cells. The Guardian reported that the discovery came from watching the robots move through a petri dish of “room temperature pond water” containing loose cells from frog embryos. The bots move in a “corkscrew pattern”, crashing into the loose cells and pushing them into smushed piles. The team also found that because the cells are sticky, a pile that is large enough can form a new, moving cluster over five days: a xenobot baby.

In an interview with CNN, Levin admitted that this process, called kinematic replication (in which an organism makes copies of itself by gathering loose material from its surroundings, rather than by growing and dividing), left him “astounded.” But there was a catch. “It turns out that these xenobots will replicate once, one generation, they will make children. But the children are too small and weak to make grandchildren,” said Bongard.

Where does the AI come in?

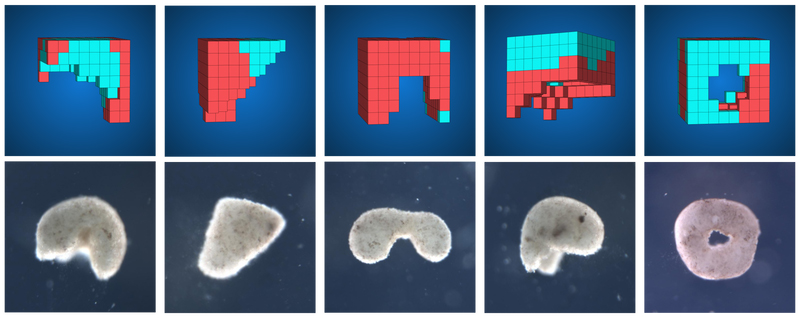

Bongard went on to tell CNN that this behaviour initially seemed rare and dependent on very specific conditions. So the team used an AI program running on a supercomputer to test billions of body shapes in simulation, searching for the shape best at gathering loose cells.

According to Bongard, “The AI didn’t program these machines in the way we usually think about writing code.” Instead, it searched for a better body shape, and one of the forms it found was strongly reminiscent of Pac-Man. Yes, Pac-Man, the yellow, chomping arcade character from your childhood. Just as Pac-Man’s form is great for gobbling up ghosts, the C-shaped xenobots proved the most effective at sweeping loose stem cells into piles and producing multiple generations of xenobots.

With this AI-designed shape, once enough loose cells were stacked together, the resulting heap of about 50 cells could become a descendant of the original xenobot. And it gets even crazier: the offspring were capable of swimming by themselves, and in doing so they piled up offspring of their own, so replication continued for further generations, producing “functional self-copies,” according to the paper. “This form of perpetuation, previously unseen in any organism, arises spontaneously over days rather than evolving over millennia,” the researchers went on to state.

Now, before those cogs start turning and you reach for your tin foil hat, the researchers have stressed that the living machines were kept entirely contained in a lab and then extinguished. Self-replicating biotechnology is definitely not a light subject, but I think we can be fairly certain this won’t lead to a robopocalypse anytime soon; remember, they are biodegradable in the end. The research is also under the watchful eyes of ethics experts.

Meanwhile, all of this news is incredibly exciting for researchers. “There are many things that are possible if we take advantage of this kind of plasticity and ability of cells to solve problems,” Bongard enthusiastically told CNN.