Gamers to face wake-up call as Riot Games and Ubisoft team up to tackle toxicity and abuse

If you’ve ever played an online game, you’ve almost certainly faced some sort of harassment, be it teammates trolling or someone taking out their frustrations on you. People get worked up playing games. It happens in real-life sports, and esports are no different. It’s a problem, a massive one.

These pseudo-anonymous online spaces have been host to trash talk and flame since their inception, but today, online gaming reaches a much wider audience. The once-playful trolling has since turned into vile abuse and hate speech, a problem that is rampant in the gaming industry, from in-game team chat to Twitch streams. It’s no surprise, then, that developers now have to obsessively moderate these online spaces to keep gameplay positive and push abuse out of the picture.

Gaming is no longer a ‘no limit’ space for pre-pubescent boys to expel their hormonal rage. The industry is expected to reach a value of $197 billion in 2022, and while all are welcome in what could have been the 21st century’s most inclusive and unifying business, the entertainment complex is still being let down by destructive individuals.

It’s important for experiences to stay friendly and clean, not only for developers and business but also for players who want to feel respected and valued in the communities built around their favourite games. Outside of basic moderation and profanity filters, major developers are having to come together to create new ways to improve the user experience and change this toxic bedrock, and at the forefront of this effort are industry giants Riot Games and Ubisoft.

Riot is already part of the toxicity problem

It’s a widespread view that Riot may be the biggest culprit behind toxicity online. League of Legends, the developer’s biggest game, spawned its own Netflix animated series titled Arcane last year, and at the time of writing, has an average monthly player base of about 150 million people. Because of the game’s easy-to-access model, people can very easily set up new accounts, and lose them with little consequence, spurring users’ desire to unleash their toxicity while playing the game.

The very nature of League of Legends’ format is integral to its issues with frustrated players. As if that wasn’t enough, Riot was also the subject of numerous exposés and lawsuits between 2018 and 2021 as a result of a negative, sexist, and juvenile working environment.

What is ‘League of Legends’?

For those of you who don’t know, League of Legends is a multiplayer online battle arena (MOBA) which has ten players go head to head in a five versus five (5v5) lane-based battle, with the end goal of getting stronger over the course of the game and ultimately dominating the opposing team’s base.

These games can take anywhere from 30 minutes to an hour, a time investment that players don’t enjoy losing. A lot of the frustration and anger that fuels online toxicity is a direct result of these energy-consuming formats. This time commitment also explains why League of Legends players are often told to ‘touch grass’ when they declare their love for the game.

How Riot and Ubisoft are tackling toxic gamer culture together

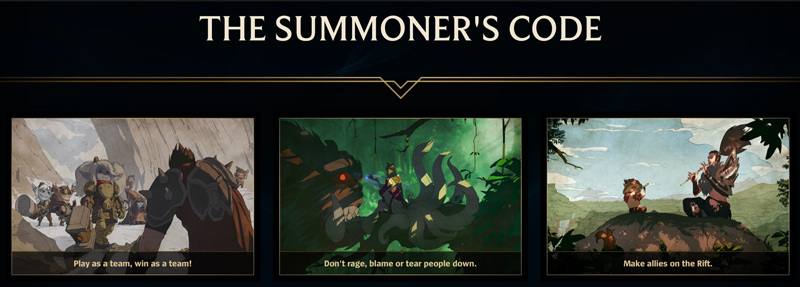

So, Riot is now trying to tackle the problem its very own game exacerbated. How, you ask? Well, in the beginning, it tried to introduce stricter rules with the help of a harsher content filter, but when that didn’t do much, the developer decided to force its players to agree to a ‘Summoner’s Code’—a sort of social contract the company holds all players accountable to—before they can even start playing the game.

Activision Blizzard, another controversial game studio, has seemingly taken a similar path and now requires players to sign a social contract when logging into any of its own creations. How effective this ‘oath of good nature’ is, however, is a totally different story.

Most online games today maintain strict punishment policies to deter players from misbehaving. Riot also now records voice chat in its free-to-play tactical shooter Valorant, in a bid to further improve and moderate its systems. The industry is moving at a steady pace towards greater inclusivity and a crackdown on negative in-game behaviour, but Riot still sees work to be done.

Here’s where Ubisoft—the developer behind the globally recognised Assassin’s Creed and Rainbow Six franchises—comes in. A company equally committed to creating safe and accessible spaces for gamers, it’s decided it can’t tackle the future of gaming interaction alone. A partnership between these two giants is a huge deal and is likely going to change the face of gaming moderation forever.

What is the ‘Zero Harm in Comms’ research project?

On 16 November, Riot and Ubisoft released a public statement, announcing a pledge to work together to create a more “positive gaming community.” This is supposedly the “first step in a cross-industry project” that’s going to improve the experience for all gamers. How are the two companies going to achieve this? With AI, duh. They hope the team-up will allow both sides to develop a database for gathering in-game data to better train AI-based preemptive moderation tools.

What this means is that, further down the line, there’s hope both companies will be able to identify negative behaviour and attitude before it’s even left your keyboard. The ultimate aim is to mitigate disruptive behaviour in-game. Riot has reassured the public that any individual’s identifiable data will be removed before it is shared—keeping everyone’s privacy safe. Until, you know, it gets leaked.

Ubisoft and Riot are both members of the Fair Play Alliance, an organisation already trying to tackle these problems today. The ambition seems sincere, and I must say, it is refreshing to see titans within the industry trying to make more active change for gaming communities. The days of in-game abuse might actually be behind us. It should also be noted that this research project is only the first phase of the mission—findings should be ready to be shared with the public sometime in 2023.

How much really needs to change and what do both sides have to say?

Moderation and free speech can be a divisive topic for gamers (heck, for people in general), and many within the community have debated whether we should use gaming as a tool to alleviate our pent-up frustrations. They play with the idea that, behind a screen, nobody is really getting hurt. The same goes for the conversation surrounding toxic practices like teabagging and rape in the metaverse.

Others would argue that basic human decency should be sought after and expected everywhere, even online. It’s a topic that has long divided gamers and the internet alike. In a bid to set the record straight, I asked several players from a variety of gaming backgrounds for their insights into the dilemma.

Cameron, 23, a casual gamer who plays mostly on console, stated: “It’s really not that deep, you can just mute people on games. But this is coming from someone who doesn’t go deep into massively multiplayer online games (MMOs) or competitive games, where you need a positive relationship with your teammates. I mostly play on consoles where people are just nowhere as bothered or pressed to get all up in other people’s shit. I guess there’s a balance and a limit to what should be allowed.”

On the other hand, Aries, a 23-year-old trans PC gamer who enjoys a spectrum of gaming, emphasised the need for users to take responsibility for what they say online: “Freedom of speech isn’t freedom to say dumb stuff on the internet or in games without consequence. You’re free to use slurs and hateful language in games but you wholly deserve the consequences for doing so. Video games should be a space for everyone to escape from reality, and if your actions are negatively impacting that, you should be punished for doing so. I am completely on the side of game developers who want to improve everyone’s gaming experience by creating safer and friendlier spaces for everyone.”

There are also, of course, generational gaps. Maxim, 27, is an older gamer who has been using these platforms and consoles since the time of unmoderated and unfiltered spaces. “Insulting people has long been a tradition in games. Trying to curb it is certainly noble and could lead to a more accessible space,” Maxim shared. “I agree with this but I also strongly feel that over-moderation erases something that is very fundamental to games—a space to release anger and frustration. Without the ability to express and vent some of these frustrations, I think that the feelings won’t disappear, but will instead be channelled into more radical communities.”

“Gaming moderation is important to people who just want to have fun. I don’t want to face bullying or slurs every second I’m in the game but at the same time, I believe there should be an opt-in to ‘toxic chat’ so that those who want to go wild have the freedom to do so. Trash-talk for me is one of gaming’s greatest joys, and even though people definitely take it too far, I like that there’s an outlet for things you’d never ever dream of saying in the real world,” the avid gamer added.

It does seem as though, while some gamers welcome this move, others remain hesitant. Stefan, 22, is a committed PC gamer who also worries that more restrictive features will have too harsh an impact on the nature of gaming: “Trash talk is definitely a part of gaming and I’d be sad to see it fully erased, so I hope that we’ll still have the freedom in the future to rib and mess with people. You might not like it but it really is a deep part of things, especially for those with a competitive edge. Being full-on toxic is terrible though and a massive no-no for me.”

A brighter future for gamers or a futile endeavour?

Honestly, the very nature of the internet and gaming as a whole suggests to me that the problem, in its entirety, will never truly be resolved. Online activity can separate you from your real-life identity and because of this, there’ll always be those who choose to exercise behaviour that would never be tolerated outside of these interactive worlds. Of course, there are many of us who bridge the gap between our real and online identities, but trolls don’t usually go for that.

Flaming and ribbing are an integral part of gaming and, let’s be honest, having the opportunity to flex on your opponent after winning is always a great feeling. However, that triumph should never be an excuse to spew hate and abuse at anyone you please.

The gaming world has changed exponentially since its heyday in the 1990s and early 2000s. It’s bigger, better, broader, and aims to be more inclusive. Things have changed, which is why the attitudes and language of gamers need to undergo the same process.

As someone who believes that anything that pushes the gaming sphere towards a more welcoming environment is a win, I’m excited to see what comes out of the research project between Riot and Ubisoft. After all, each of us deserves the freedom to unwind and relax without the fear of targeted abuse.